Distributed Systems: Difference between revisions


In combination with the characteristics mentioned above, these result in several topical |

In combination with the characteristics mentioned above, these result in several topical |

||

areas for security. |

areas for security. |

||

First, distributing a system's components strengthens the importance of |

First, distributing a system's components strengthens the importance of access control. |

||

While access control in systems that are confined to single locations and specified groups of users |

|||

between components and of the system as a whole regarding its interactions with the |

|||

is a problem in itself, it becomes almost unsolvable when magnitudes of locations, |

|||

environment. |

|||

different persons in charge and unknown user groups are involved. |

|||

Consequently, we will focus on trust in the first section of the following text. |

|||

Consequently, we will focus on distributed access control in the first section of the following text. |

|||

Service requirements are the second field we will take a look at; here, our main aim |

Service requirements are the second field we will take a look at; here, our main aim |

||

will be outlining the implications of concurrency. |

will be outlining the implications of concurrency. |

||

| Line 37: | Line 38: | ||

fault tolerance research, which will lead us to the concept of intrusion tolerance. |

fault tolerance research, which will lead us to the concept of intrusion tolerance. |

||

==Distributed Access Control== |

|||

==Trust== |

|||

Access control refers to the restriction of access to facilities, property, information, ressources etc. |

|||

Access control is traditionally divided into the two subtasks of (1) authentication and (2) authorization. |

|||

(1) deals with identification. It is about knowing if the process or user trying to get access is in fact |

|||

the process or user it is claiming to be. |

|||

The second task is responsible for granting access to the requested ressource or information. |

|||

It will be shown here that in distributed systems both may merge to one. |

|||

=== Access Control Models === |

|||

Access control models are sets of formalized, concise security goals and properties, as well as methods |

|||

of enforcing them. |

|||

There are a number of well known access control models which usually have strong roots in the domain |

|||

they are applied to. |

|||

A lot of the research done in this area is not primarily rooted in distributed systems, so going from |

|||

the formal models approaches will be searched that are suitable for the specific tasks and requirements |

|||

of distributed systems. |

|||

==== Mandatory Access Control (MAC) ==== |

|||

MAC restricts access to objects on the basis of sensitivity and clearance. Sensitivity is usually represented by a label on the object. Clearance is an information about the principal requesting access. It involves formal authorization. MAC is the fundamental model for military access control. [http://en.wikipedia.org/wiki/Mandatory_access_control (MAC)] |

|||

==== Discretionary Access Control (DAC) ==== |

|||

The fundamental term in the access control model is "owner". The owner of an object is fully trusted to manage its security. Owners have or can aquire all rights to their objects. Furthermore owners may grant or deny access to groups or others. DAC is the model behind rights management in the unix world. [http://en.wikipedia.org/wiki/Discretionary_access_control (DAC)] |

|||

==== Role Based Access Control (RBAC) ==== |

|||

Roles reflect organizational functions. Access rights are granted on the basis of roles. Principals aquire roles and therefor access rights. [http://en.wikipedia.org/wiki/RBAC (RBAC)] |

|||

=== Distributed Authorization === |

|||

All described models are |

|||

==== Access Control Lists ==== |

|||

Access control lists are a common mechanism for access control. |

|||

The basic idea is to attach tupels mapping subjects to rights to objects. |

|||

This is a well known technique uitilized in operating systems such as Windows NT. |

|||

The idea is to authenticate the user and rely on existing access control lists for the authorization decision. In some cases remote users are mapped to local users. This approach does not allow for delegation and relies heavily on an existing authentication infrastructure, resulting in scalability problems. An example of this technique is [http://en.wikipedia.org/wiki/Kerberos_%28protocol%29 Kerberos], which is build on the Needham-Schroeder protocol. |

|||

==== Capability-based Authorization ==== |

|||

Capability based access control attaches tupels to subjects. The tupels map objects to access rights and are stored with every subject. The tupels may be extended by hashes. On creation of the object a secret may be created. The hash is constructed over the secret, an object identifier and the access rights. The hash protects against forgery, while allowing local and fast authorization. With the hash access rights may be granted locally, knowing the secret of the object. However capabilities of this kind may be stolen, leaving unanswered questions of revocation of capabilities. |

|||

==== Credential-based Authorization ==== |

|||

Some of the problems of capability based authorization can be answered when the hash is extended. ICAP developed by L.Gong also includes user identifiers with the hash, allowing for selective revocation, as well as preventing theft of credentials. Truly distributed security policies are possible. As stated above access control is traditionally divided into 2 tasks authentication andauthorization. The credential based approach, being the most sophisticated so far delivers 2 possible solutions to the whole problem of access control. The first (identitiy based)leaves the distinction between authentication and authorization true, while the second (key-based) merges the 2 processes into one. |

|||

===== Identity-based Credentials ===== |

|||

Identity oriented access control is based on names. Names bind access rights to principals/subjects. This seperates the process of controlling access into establishing the identity of a principal (map name to principal) and maping the established name to access rights. Authentication is usually done via public key infrastructures(PKI), while authorization just maps names to access rights (this can be done via ACL). Usually well known and tested standards like X.509 are used. |

|||

Names however comprise a whole new set of problems when used in distributed systems. Certain uniqueness and stability constraints must be met. Central naming authorities need to be established. |

|||

===== Key-based Credentials ===== |

|||

In key-based systems the need for central naming authorities is eliminated. Every principal generates own public/private key pair. Public keys are used as global identifiers. Every service is responsible for authentication and authorization. Meaning access decisions are made local. An example of this kind of access control is [http://world.std.com/~cme/spki.txt SPKI]. |

|||

==Concurrency== |

==Concurrency== |

||

Concurrency is when processes or tasks run at the same time. A lot of problems of concurrent processes arise from the access to data or ressources. This involves certain constraints on data, like consistency, as well as from different access protocol problems, e.g. deadlocks. Usually protocols are designed to solve these problems. A lot of research has been done in the field of databases which deal with large amounts of data and concurrent access problems. This includes concurrency control protocols, deadlocks, transaction concepts. However there are a number of problems that are inherently security related. Some of these will be discussed here. |

|||

=== Old Data === |

|||

This involves the use of old data in replay attacks, by for instance using old or outdated credentials. As well as race conditions and ''TOCTTOU'' problems. ''TOCTTOU'' means "time-check-to-time-of-use" and specifically refers to timing or synchronization flaws arising from different characteristics of an object at different points in time. ''TOCTTOU'' problems are a subclass of state propagation problems. These arise in large distributed systems, where it may be impossible to hold data about every object available to every entity at any point in time. Examples include public key certificate revocation, as well as hot credit card revocation problems. The usual approach is multi-layer-authorization, which tries to minimize the impact of security state changes by triggering authorization based on the importance or impact of transactions. |

|||

=== Inconsistent Updates === |

|||

This refers to the problem of leaving data in inconsistent states. Where consistency is usually a set of "a priori" rules the data must meet. This includes lost updates and incosistent read/write sequences. Usually locking protocols (like 2-Phase-Locking) are applied to solve these problems. These protocols however give rise to the well studied phenomenon of deadlocks. Another way of dealing with the update of data are callback mechanisms. |

|||

=== Time === |

|||

Accurate time is needed for a number of security related protocols. This makes providing accurate time a security related problem itself. There are a number of time specific attacks, like the cinderella attack. One example of such an attack is the disabling of security software by manipulating the time, so that specific licenses to use the software expire. Solutions to the problem of secure time include NTP (Network time protocol), which provides for the authentication of time sources, as well as a voting mechanism, reducing the likelihood of manipulations. |

|||

==Fault Tolerance== |

==Fault Tolerance== |

||

As laid out in the introduction, one main reason for deploying a system in a distributed |

|||

manner is a particular, task-specific demand on the service it provides. |

|||

Obviously, such a demand also leads to certain requirements regarding the service's |

|||

availability, e.g., the mobile-phone system is of little use if it does not work. |

|||

===Insights from Dependability=== |

|||

Dependability research has obtained a few insights into the complexities of |

|||

ensuring availability as well as into the effectiveness of possible solutions. |

|||

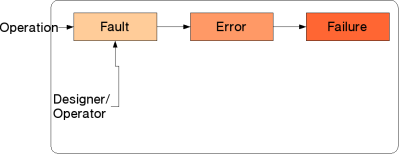

Its most basic model is the famous "Fault-Error-Failure" sequence of |

|||

how computer systems (of any kind) end up in a failure state, i.e., |

|||

not conforming to specified system or service behaviour (The figures are |

|||

based on those in Verissimo et al.): |

|||

[[Image:Distributed-systems.ft-1.png|The Classical Fault-Error-Failure model.]] |

|||

A fault -- some minor inconsistency with the specification, introduced by the |

|||

operator or the designer -- in a component is triggered and leads to an error |

|||

-- a state where a part of the component does not conform to its specified |

|||

behaviour --, which, if untreated, eventually results in the failure of |

|||

the component. |

|||

For example, a single bit in a memory module may permanently stay at value |

|||

1 under certain environment conditions. |

|||

When a program uses the module's value as part of an address and this address |

|||

happens to be outside the program's memory range, the program could be |

|||

killed by the OS and may fail to provide its service. |

|||

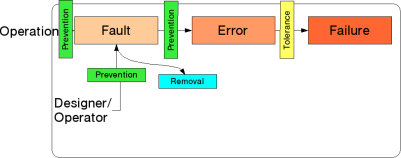

The chain just described can be broken at several points: First, fault and |

|||

error prevention measures can be applied to stop the introduction of faults |

|||

and their resulting in errors, both by design as well as by operation. |

|||

Second, one can seek to remove faults after identifying them. |

|||

However, common experience says that neither can be fully effective, and |

|||

thus there will always be faults. |

|||

Taking this into account, one has to tolerate said faults -- i.e. find ways |

|||

to prevent them from causing failures: |

|||

[[Image:Distributed-systems.ft-2.png]] |

|||

Fault Tolerance approaches in general rely on the introduction of redundancy in |

|||

the system, meaning redundancy in space (e.g., having backup components -- "spares" -- |

|||

or replicated components voting on the correct results), in time (e.g., repeating |

|||

calculations or employing restarts) and combinations thereof. |

|||

Based on assumptions regarding fault classes, fault distributions and failure |

|||

models, a system's availability (and thus the effectiveness of methods) can |

|||

be quantified, e.g., "five nines." |

|||

===Interplay between Fault Tolerance measures and System Security=== |

|||

Behold, the fool saith, "Put not all thine eggs in the one basket" -- which is |

|||

but a manner of saying, "Scatter your money and your attention;" but the wise |

|||

man saith, "Put all your eggs in the one basket and -- WATCH THAT BASKET." |

|||

:-- Mark Twain, "Pudd'nhead Wilson's Calendar" |

|||

Since the fault tolerance techniques just mentioned constitute a significant |

|||

change in system design, their effects on system security must be taken into |

|||

account. |

|||

One example is replication: Obviously, it quickly becomes impossible to |

|||

ascertain physical security of replicated components when these are |

|||

distributed geographically in order to ensure |

|||

the service availability (e.g., another ATM could be within walking distance, |

|||

so the service is still available). |

|||

Furthermore, one also has to look at possible effects of security measures |

|||

on fault tolerance. |

|||

It may be much easier to secure a single server than a whole network -- but |

|||

by doing so a single point of failure is created: this component's failure |

|||

alone constitues a system failure. |

|||

===Intrusion Tolerance=== |

|||

So, obviously fault tolerance and security can interfere, but can the security |

|||

field learn from fault tolerance, and if so, are there limits in regard to |

|||

applying its results? |

|||

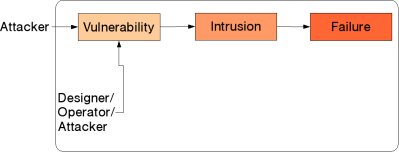

The first point that comes up in answering the question is the similarity |

|||

of the basic models. One can identify the concept of a fault with that of a |

|||

vulnerability, which, when triggered by an attack -- i.e., an action by |

|||

the environment -- leads to an intrusion, which in turn may lead to a failure |

|||

in the security domain, that is, the system failing to meet some or all of its |

|||

stated security properties such as confidentiality: |

|||

[[Image:Distributed-systems.it-1.png]] |

|||

This has been dubbed the AVI (Attack-Vulnerability-Intrusion) model. |

|||

The picture above differs from the classical model in that faults may also |

|||

be introduced by the environment. E.g., an attacker, having already gained |

|||

partial access, may attempt to introduce additional vulnerabilities. |

|||

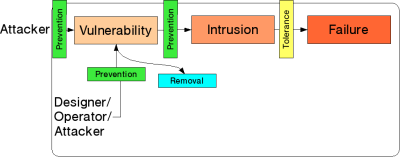

Nonetheless, the models are almost identical, and unsurprisingly the classes |

|||

of methods are as well: |

|||

[[Image:Distributed-systems.it-2.png]] |

|||

Here, prevention and removal of vulnerabilities are achieved through hardening |

|||

systems by e.g. employing secure design methods and patching |

|||

security holes, respectively, which is the classical security paradigm. |

|||

One conclusion from dependability research is that neither prevention nor |

|||

removal can be considered perfect, so one has to doubt whether assuming them to be |

|||

sufficient in the security area is reasonable -- and the more a system grows, the |

|||

less convincing it is to state that all parts can be made perfectly secure. |

|||

The idea of fault tolerance stems from the very same fact, so intrusion tolerance, |

|||

that is, accepting the inevitability of intrusions and working around them, should |

|||

be considered. |

|||

Well-studied fault tolerance approaches may be useful. To apply them, the types |

|||

of possible faults must be identified. |

|||

In the security field, the most obvious model is that faults are of "malicious" |

|||

nature, that is, one has to assume a faulty component to work in a way that |

|||

aims at causing the worst possible failure. |

|||

In other words: there are no limits to what it can do, which corresponds to |

|||

FT's "Byzantine behaviour." |

|||

Unfortunately, methods of tolerating Byzantine faults are expensive in terms |

|||

of communication and computing resources as well as in regard to component |

|||

requirements, which may make them infeasible. |

|||

On the other hand, more restricted models always introduce the danger of assuming |

|||

non-existant properties. |

|||

Nonetheless, if these properties can be assured, hybrid models with different |

|||

fault models for different components of a system can become useful. |

|||

For example, employing devices that are physically tamper-resistant in such |

|||

a way that they stop working in case someone tries to open the case allows to |

|||

utilize the "crash" model for these components, which is much easier to handle. |

|||

===A word of caution concerning applicability===

It is tempting to simply copy approaches, assuming that system security emerges in the same way fault tolerance does, but a well-known example shows that this may be misleading.

Consider the famous Byzantine Generals problem: n principals have to come to the same result to complete a task, so they must communicate. There are t traitors amongst them, who are allowed to act in an arbitrary way. As Lamport showed, the problem can be solved if and only if n ≥ 3t+1 holds. A system consisting of n ≥ 3t+1 servers trying to agree on one result can therefore be deemed tolerant to t failures. If, as is the case in most fault tolerance scenarios, component failures happen independently, such a system is fault tolerant.
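The bound n ≥ 3t+1 can be turned into a small piece of arithmetic. The following sketch is only an illustration of the inequality; the function names `max_traitors` and `min_nodes` are made up for this example and do not come from the literature.

```python
def max_traitors(n: int) -> int:
    """Largest t that satisfies n >= 3t + 1 for a system of n nodes."""
    return max((n - 1) // 3, 0)


def min_nodes(t: int) -> int:
    """Smallest n that satisfies n >= 3t + 1 for t arbitrary (Byzantine) nodes."""
    return 3 * t + 1


for n in (3, 4, 6, 7, 10):
    print(f"n={n}: tolerates up to t={max_traitors(n)} traitors")
```

Note that going from 4 to 6 nodes does not buy any additional tolerance; only the seventh node raises t from 1 to 2.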

In the security area, though, the implicit independence assumption becomes troublesome. In a system composed of n machines sharing the same easily exploited vulnerability, it is clearly violated, so the replication does not provide any additional security. It follows that independence of compromises must somehow be ensured, which can be done in several ways.

One is to use the traditional prevention paradigm: harden the components to avoid easily exploitable vulnerabilities. For example, physical tamper resistance in combination with effective access controls can prevent nodes from becoming Byzantine.

Another option is proactive recovery, a bundle of measures that share the characteristic of periodically resetting components to a known (i.e., secure) state and are thus equivalent to rejuvenation in fault tolerance. This parallel is obvious in the approach of restoring an uncompromised state from read-only media every once in a while by restarting nodes. The concept is also applied to cryptographic protocols by changing keys ("re-keying") or certificates on a regular basis, which renders previously gathered keys unusable.

===An Example: COCA===

Some of these concepts are illustrated by the Cornell Online Certificate Authority (COCA), a distributed system of n nodes that provides CA services to outside clients and withstands t intrusions as long as n ≥ 3t+1 holds. Here, intrusion tolerance results from applying Byzantine agreement in the form of threshold signatures. All replies from the CA as well as all updates to it must be signed with the CA's service key, which consists of a public and a private part. The private part is scattered over the COCA servers; no single COCA server knows the whole private key. Instead, to sign messages with the service key, t+1 servers must collaborate, which forces an attacker to compromise t+1 servers in order to have the CA deliver false certificates. To ensure independence of intrusions, proactive recovery is applied through frequent changes of the private key fragments.
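The threshold property and the periodic change of key fragments can be illustrated with Shamir secret sharing. This is a swapped-in, simpler technique and not COCA's actual scheme: COCA uses threshold signatures, where the private key is never reassembled in one place, whereas this sketch reconstructs the secret and uses a single trusted dealer for the refresh. The field modulus `P` and the function names `split`, `reconstruct` and `refresh` are assumptions made for this example (Python 3.8+ is assumed for the modular inverse via `pow`).

```python
import secrets

P = 2**127 - 1  # prime modulus of the finite field used for the shares


def split(secret: int, n: int, t: int) -> list:
    """Create n shares such that any t+1 of them reconstruct the secret."""
    # Random polynomial of degree t with the secret as constant term.
    coeffs = [secret] + [secrets.randbelow(P) for _ in range(t)]

    def evaluate(x: int) -> int:
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % P
        return y

    return [(x, evaluate(x)) for x in range(1, n + 1)]


def reconstruct(shares: list) -> int:
    """Lagrange interpolation at x = 0 over the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % P
                den = (den * (xi - xj)) % P
        secret = (secret + yi * num * pow(den, -1, P)) % P
    return secret


def refresh(shares: list, t: int) -> list:
    """Proactive refresh: add a fresh sharing of zero to the full share list.

    The secret stays the same, but old and new shares no longer combine.
    """
    zero_shares = split(0, len(shares), t)
    return [(x, (y + z) % P) for (x, y), (_, z) in zip(shares, zero_shares)]


shares = split(12345, n=4, t=1)           # n = 3t + 1 with t = 1
assert reconstruct(shares[:2]) == 12345   # any t+1 = 2 shares suffice
fresh = refresh(shares, t=1)
assert reconstruct(fresh[2:]) == 12345    # the secret survives the refresh
```

After a refresh, a share stolen before the refresh is useless in combination with shares obtained afterwards, which is the effect the text attributes to changing the private key fragments.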

==Bibliography==

# Coulouris, Dollimore, Kindberg: Verteilte Systeme (2002)
# Verissimo, Neves, Correia: Intrusion-Tolerant Architectures: Concepts and Design (2003)
# Zhou, Schneider, van Renesse: COCA: A Secure Distributed On-Line Certification Authority (2002)
# Yao: Trust Management for Widely Distributed Systems (2003)

==Heap of unused text==

Latest revision as of 11:59, 4 January 2005

Introduction

Out of the many possible definitions of a distributed system, we employ the following, which is perhaps the most general (and in particular supersedes Lamport's humorous remark that "a distributed system is the one that prevents you from working because of the failure of a machine that you had never heard of"):

- Distributed System

A distributed system comprises a set of distributed (i.e., not located in the same spot) components (hardware and/or software) working together to provide one service.

That is, there are two characteristics to a distributed system: (1) the service it provides emerges at the system level and (2) components must communicate over links (which are components themselves). The first implies that parts of the system, although they might be usable stand-alone, are not what we mean by the term -- a cell phone's games or calendar functions are not distributed, but the service "communicate by voice independently of location" is. The second narrows down the range: programs that run on the same host and communicate over IPC are not considered distributed. Both points also affect the security of such a system. Consistent with (1), new security problems arise at the system level in a distributed setting, and they are not limited to the consequence of (2) that communication is potentially dangerous.

There are at least two main reasons for distribution: the service may require it (e.g., credit card systems have to be available in many locations), or availability considerations may make it necessary to remove single points of failure by replicating components. In combination with the characteristics mentioned above, these result in several topical areas for security. First, distributing a system's components increases the importance of access control. While access control in systems that are confined to single locations and specified groups of users is a problem in itself, it becomes almost unsolvable when large numbers of locations, different persons in charge and unknown user groups are involved. Consequently, we will focus on distributed access control in the first section of the following text. Service requirements are the second field we will take a look at; here, our main aim will be to outline the implications of concurrency. Third, we will briefly consider the interplay of fault tolerance and security and explore whether, and how, the security of distributed systems can benefit from fault tolerance research, which will lead us to the concept of intrusion tolerance.

Distributed Access Control

Access control refers to the restriction of access to facilities, property, information, resources etc. It is traditionally divided into the two subtasks of (1) authentication and (2) authorization. Authentication deals with identification: it is about knowing whether the process or user trying to get access is in fact the process or user it claims to be. Authorization is responsible for granting access to the requested resource or information. It will be shown here that in distributed systems both may merge into one.

Access Control Models

Access control models are sets of formalized, concise security goals and properties, together with methods of enforcing them. There are a number of well-known access control models, which usually have strong roots in the domain they are applied to. Much of the research done in this area is not primarily rooted in distributed systems, so, starting from the formal models, we look for approaches that suit the specific tasks and requirements of distributed systems.

Mandatory Access Control (MAC)

MAC restricts access to objects on the basis of sensitivity and clearance. Sensitivity is usually represented by a label on the object. Clearance is information about the principal requesting access and involves formal authorization. MAC is the fundamental model for military access control. [http://en.wikipedia.org/wiki/Mandatory_access_control (MAC)]

Discretionary Access Control (DAC)

The fundamental term in this access control model is "owner". The owner of an object is fully trusted to manage its security. Owners have or can acquire all rights to their objects. Furthermore, owners may grant or deny access to groups or others. DAC is the model behind rights management in the Unix world. [http://en.wikipedia.org/wiki/Discretionary_access_control (DAC)]

Role Based Access Control (RBAC)

Roles reflect organizational functions. Access rights are granted on the basis of roles: principals acquire roles and thereby the access rights attached to them. [http://en.wikipedia.org/wiki/RBAC (RBAC)]
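As a minimal sketch of the role-based idea, the following example grants access only through roles. The role names, the permission table `ROLE_PERMISSIONS` and the `Principal` class are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from roles to (object, action) permissions.
ROLE_PERMISSIONS = {
    "teller": {("account", "read"), ("account", "deposit")},
    "auditor": {("account", "read"), ("ledger", "read")},
}


@dataclass
class Principal:
    name: str
    roles: set = field(default_factory=set)


def is_permitted(principal: Principal, obj: str, action: str) -> bool:
    """Grant access if any role held by the principal carries the permission."""
    return any(
        (obj, action) in ROLE_PERMISSIONS.get(role, set())
        for role in principal.roles
    )


alice = Principal("alice", {"teller"})
print(is_permitted(alice, "account", "deposit"))
print(is_permitted(alice, "ledger", "read"))
```

Rights are never attached to `alice` directly; changing what a teller may do only requires editing the role table.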

Distributed Authorization

All described models are

Access Control Lists

Access control lists (ACLs) are a common mechanism for access control. The basic idea is to attach to each object a list of tuples that map subjects to rights. This is a well-known technique used in operating systems such as Windows NT. In a distributed setting, the idea is to authenticate the user and rely on existing access control lists for the authorization decision. In some cases remote users are mapped to local users. This approach does not allow for delegation and relies heavily on an existing authentication infrastructure, which results in scalability problems. An example of such an authentication infrastructure is [http://en.wikipedia.org/wiki/Kerberos_%28protocol%29 Kerberos], which is built on the Needham-Schroeder protocol.
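The lookup described above can be sketched as follows. The object names, the subjects and the `REMOTE_TO_LOCAL` mapping are hypothetical; authentication is assumed to have happened already, so `authorize` only receives an authenticated subject name.

```python
# ACLs are stored with the objects: object -> {subject -> rights}.
ACL = {
    "report.txt": {"alice": {"read", "write"}, "bob": {"read"}},
}

# Hypothetical mapping of authenticated remote users to local users.
REMOTE_TO_LOCAL = {"alice@site-b": "alice"}


def authorize(authenticated_subject: str, obj: str, right: str) -> bool:
    """Map a remote subject to a local one, then consult the object's ACL."""
    local = REMOTE_TO_LOCAL.get(authenticated_subject, authenticated_subject)
    return right in ACL.get(obj, {}).get(local, set())


print(authorize("alice@site-b", "report.txt", "write"))
print(authorize("bob", "report.txt", "write"))
```

The sketch also shows why delegation is awkward in this scheme: bob cannot pass his read right to anyone without a change to the list kept with the object.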

Capability-based Authorization

Capability-based access control attaches tuples to subjects. The tuples map objects to access rights and are stored with every subject. The tuples may be extended by hashes: when an object is created, a secret may be created along with it, and the hash is computed over the secret, an object identifier and the access rights. The hash protects against forgery while allowing fast, local authorization. Anyone who knows the secret of the object can verify, and also grant, access rights locally. However, capabilities of this kind may be stolen, and the question of how to revoke them remains open.
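A hash-protected capability of this kind might look as follows. The sketch uses HMAC-SHA256 keyed with the object's secret, which is one possible way to build a hash over the secret, the object identifier and the rights; the text does not prescribe a particular construction. The function names and the `|` and `,` separators are assumptions, and object identifiers and rights are assumed not to contain these characters.

```python
import hashlib
import hmac
import secrets


def new_object_secret() -> bytes:
    """Secret created together with the object and kept by its server."""
    return secrets.token_bytes(32)


def _tag(secret: bytes, object_id: str, rights_field: str) -> str:
    message = f"{object_id}|{rights_field}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def issue_capability(secret: bytes, object_id: str, rights: set) -> tuple:
    """Build the tuple (object, rights, hash) that is handed to a subject."""
    rights_field = ",".join(sorted(rights))
    return (object_id, rights_field, _tag(secret, object_id, rights_field))


def check_capability(secret: bytes, capability: tuple, right: str) -> bool:
    """Local check: recompute the hash, then look for the requested right."""
    object_id, rights_field, tag = capability
    expected = _tag(secret, object_id, rights_field)
    return hmac.compare_digest(tag, expected) and right in rights_field.split(",")


secret = new_object_secret()
cap = issue_capability(secret, "file-42", {"read"})
forged = (cap[0], "read,write", cap[2])          # rights changed, hash reused
print(check_capability(secret, cap, "read"))     # valid capability
print(check_capability(secret, forged, "write")) # forgery is rejected
```

The check needs no lookup of who the caller is, which is also why a stolen capability works for the thief just as well as for the original holder.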

Credential-based Authorization

Some of the problems of capability-based authorization can be solved by extending the hash. ICAP, developed by L. Gong, also includes user identifiers in the hash, which allows for selective revocation and prevents theft of credentials. Truly distributed security policies become possible. As stated above, access control is traditionally divided into two tasks, authentication and authorization. The credential-based approach, being the most sophisticated so far, delivers two possible solutions to the whole problem of access control. The first (identity-based) leaves the distinction between authentication and authorization intact, while the second (key-based) merges the two processes into one.

Identity-based Credentials

Identity-oriented access control is based on names. Names bind access rights to principals/subjects. This separates the process of controlling access into establishing the identity of a principal (mapping a name to a principal) and mapping the established name to access rights. Authentication is usually done via public key infrastructures (PKI), while authorization just maps names to access rights (this can be done via ACLs). Usually, well-known and tested standards like X.509 are used.

Names, however, bring a whole new set of problems when used in distributed systems. Certain uniqueness and stability constraints must be met, and central naming authorities need to be established.

Key-based Credentials

In key-based systems the need for central naming authorities is eliminated. Every principal generates its own public/private key pair, and public keys are used as global identifiers. Every service is responsible for both authentication and authorization, which means that access decisions are made locally. An example of this kind of access control is [http://world.std.com/~cme/spki.txt SPKI].

Concurrency

Concurrency means that processes or tasks run at the same time. Many problems of concurrent processes arise from access to shared data or resources. They stem from constraints on the data, such as consistency, as well as from problems of the access protocols themselves, e.g., deadlocks. Usually protocols are designed to solve these problems. A lot of research has been done in the field of databases, which deal with large amounts of data and concurrent access; this includes concurrency control protocols, deadlocks and transaction concepts. However, there are a number of problems that are inherently security related. Some of these will be discussed here.

Old Data

This involves the use of old data in replay attacks, for instance by using old or outdated credentials, as well as race conditions and ''TOCTTOU'' problems. ''TOCTTOU'' means "time-of-check-to-time-of-use" and specifically refers to timing or synchronization flaws that arise because an object has different characteristics at different points in time. ''TOCTTOU'' problems are a subclass of state propagation problems. These arise in large distributed systems, where it may be impossible to make data about every object available to every entity at any point in time. Examples include the revocation of public key certificates and of hot credit cards. The usual approach is multi-layer authorization, which tries to minimize the impact of security state changes by triggering authorization based on the importance or impact of transactions.
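One way to read multi-layer authorization is sketched below: low-impact transactions may rely on a recently cached check, while high-impact ones always consult the authoritative revocation state. The thresholds `CACHE_TTL` and `HIGH_VALUE`, the in-memory `REVOKED` set and all function names are assumptions made for this illustration.

```python
import time

REVOKED = set()      # authoritative revocation list (hypothetical, in memory)
CACHE_TTL = 300.0    # seconds for which a successful check may be reused
HIGH_VALUE = 100.0   # transactions at or above this always ask the authority

_last_ok = {}        # credential id -> time of the last successful check


def check_authority(cred_id: str) -> bool:
    """Stands in for an expensive remote query to the issuing authority."""
    return cred_id not in REVOKED


def authorize(cred_id: str, amount: float, now: float = None) -> bool:
    now = time.time() if now is None else now
    checked_at = _last_ok.get(cred_id)
    fresh = checked_at is not None and now - checked_at < CACHE_TTL
    if amount < HIGH_VALUE and fresh:
        return True  # low impact: accept possibly outdated state
    ok = check_authority(cred_id)
    if ok:
        _last_ok[cred_id] = now
    else:
        _last_ok.pop(cred_id, None)
    return ok
```

With this policy a revoked card can still be used for small purchases until the cached result expires, but never for a large one; the window of the TOCTTOU problem is bounded by `CACHE_TTL` and by the transaction value.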

Inconsistent Updates

This refers to the problem of leaving data in inconsistent states, where consistency is usually defined by a set of "a priori" rules the data must meet. Examples include lost updates and inconsistent read/write sequences. Usually locking protocols (like two-phase locking) are applied to solve these problems. These protocols, however, give rise to the well-studied phenomenon of deadlocks. Another way of dealing with updates of data is to use callback mechanisms.
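The lost-update problem and its cure by locking can be sketched with threads. This example uses a single mutex around a read-modify-write sequence, which is a much simpler mechanism than two-phase locking; the `Account` class and the `run` helper are made up for the illustration.

```python
import threading


class Account:
    def __init__(self, balance: int = 0):
        self.balance = balance
        self._lock = threading.Lock()

    def deposit(self, amount: int) -> None:
        # Without the lock, two threads could both read the same old balance
        # and one of the two updates would be lost.
        with self._lock:
            current = self.balance
            self.balance = current + amount


def run(n_threads: int = 8, deposits_per_thread: int = 1000) -> int:
    """Let several threads deposit concurrently and return the final balance."""
    account = Account()

    def worker() -> None:
        for _ in range(deposits_per_thread):
            account.deposit(1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return account.balance


print(run())
```

Because every deposit holds the lock for the whole read-modify-write step, the final balance equals the number of deposits made.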

Time

Accurate time is needed for a number of security-related protocols, which makes providing accurate time a security-related problem in itself. There are a number of time-specific attacks, like the Cinderella attack: security software is disabled by manipulating the time so that the licenses required to use the software expire. Solutions to the problem of secure time include NTP (Network Time Protocol), which provides for the authentication of time sources as well as a voting mechanism that reduces the likelihood of manipulation.
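The idea of voting among time sources can be sketched as follows. This is not NTP's actual selection algorithm, only a simplified illustration: readings far from the median are discarded, and a result is returned only if a majority of sources agree. The function name `agreed_time` and the `tolerance` parameter are assumptions.

```python
from statistics import median


def agreed_time(readings: list, tolerance: float = 1.0) -> float:
    """Average the readings that lie within `tolerance` seconds of the median.

    Raises ValueError unless more than half of the sources agree.
    """
    if not readings:
        raise ValueError("no time sources available")
    mid = median(readings)
    close = [r for r in readings if abs(r - mid) <= tolerance]
    if 2 * len(close) <= len(readings):
        raise ValueError("no majority of time sources agree")
    return sum(close) / len(close)


# Three honest sources and one manipulated source that is far in the future.
print(agreed_time([100.0, 100.2, 99.9, 5000.0]))
```

A single manipulated source cannot move the result far, which is the effect the voting mechanism is meant to achieve; an attacker controlling a majority of sources still can.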

Fault Tolerance

As laid out in the introduction, one main reason for deploying a system in a distributed manner is a particular, task-specific demand on the service it provides. Obviously, such a demand also leads to certain requirements regarding the service's availability, e.g., the mobile-phone system is of little use if it does not work.

Insights from Dependability

Dependability research has obtained a few insights into the complexities of ensuring availability as well as into the effectiveness of possible solutions. Its most basic model is the famous "Fault-Error-Failure" sequence, which describes how computer systems (of any kind) end up in a failure state, i.e., not conforming to the specified system or service behaviour (the figures are based on those in Verissimo et al.):

[[Image:Distributed-systems.ft-1.png|The Classical Fault-Error-Failure model.]]

A fault (some minor inconsistency with the specification, introduced by the operator or the designer) in a component is triggered and leads to an error (a state where a part of the component does not conform to its specified behaviour), which, if untreated, eventually results in the failure of the component. For example, a single bit in a memory module may permanently stay at value 1 under certain environmental conditions. When a program uses the module's value as part of an address and this address happens to be outside the program's memory range, the program could be killed by the OS and may fail to provide its service.

The chain just described can be broken at several points: First, fault and error prevention measures can be applied to stop the introduction of faults and their resulting in errors, both by design as well as by operation. Second, one can seek to remove faults after identifying them. However, common experience says that neither can be fully effective, and thus there will always be faults. Taking this into account, one has to tolerate said faults -- i.e. find ways to prevent them from causing failures:

Fault Tolerance approaches in general rely on the introduction of redundancy in the system, meaning redundancy in space (e.g., having backup components -- "spares" -- or replicated components voting on the correct results), in time (e.g., repeating calculations or employing restarts) and combinations thereof. Based on assumptions regarding fault classes, fault distributions and failure models, a system's availability (and thus the effectiveness of methods) can be quantified, e.g., "five nines."
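As a worked example of such a quantification, the following sketch computes what "five nines" means in downtime per year and how replication in space raises availability. It assumes that replicas fail independently and that a single working replica is enough to provide the service; both function names are made up for this illustration.

```python
def downtime_minutes_per_year(availability):
    """Expected downtime per (365-day) year for a given availability."""
    return (1 - availability) * 365 * 24 * 60

def parallel_availability(availability, replicas):
    """Availability of a set of replicas, assuming independent failures
    and that one working replica suffices."""
    return 1 - (1 - availability) ** replicas

print(downtime_minutes_per_year(0.99999))   # "five nines": roughly 5.3 minutes per year
print(parallel_availability(0.99, 2))       # two 99% replicas: roughly 99.99%
```

The independence assumption carries the whole result, which becomes important once attacks instead of random faults are considered.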

Interplay between Fault Tolerance measures and System Security

Behold, the fool saith, "Put not all thine eggs in the one basket" -- which is but a manner of saying, "Scatter your money and your attention;" but the wise man saith, "Put all your eggs in the one basket and -- WATCH THAT BASKET."

-- Mark Twain, "Pudd'nhead Wilson's Calendar"

Since the fault tolerance techniques just mentioned constitute a significant change in system design, their effects on system security must be taken into account. One example is replication: it quickly becomes impossible to ensure the physical security of replicated components when these are distributed geographically in order to ensure service availability (e.g., another ATM could be within walking distance, so the service is still available). Conversely, one also has to look at possible effects of security measures on fault tolerance. It may be much easier to secure a single server than a whole network -- but doing so creates a single point of failure: this component's failure alone constitutes a system failure.

Intrusion Tolerance

So, obviously fault tolerance and security can interfere, but can the security field learn from fault tolerance, and if so, are there limits in regard to applying its results?

The first point that comes up in answering the question is the similarity of the basic models. One can identify the concept of a fault with that of a vulnerability, which, when triggered by an attack -- i.e., an action by the environment -- leads to an intrusion, which in turn may lead to a failure in the security domain, that is, the system failing to meet some or all of its stated security properties such as confidentiality:

This has been dubbed the AVI (Attack-Vulnerability-Intrusion) model. The picture above differs from the classical model in that faults may also be introduced by the environment. E.g., an attacker, having already gained partial access, may attempt to introduce additional vulnerabilities. Nonetheless, the models are almost identical, and unsurprisingly the classes of methods are as well:

Here, prevention and removal of vulnerabilities are achieved through hardening systems by e.g. employing secure design methods and patching security holes, respectively, which is the classical security paradigm. One conclusion from dependability research is that neither prevention nor removal can be considered perfect, so one has to doubt whether assuming them to be sufficient in the security area is reasonable -- and the more a system grows, the less convincing it is to state that all parts can be made perfectly secure. The idea of fault tolerance stems from the very same fact, so intrusion tolerance, that is, accepting the inevitability of intrusions and working around them, should be considered.

Well-studied fault tolerance approaches may be useful. To apply them, the types of possible faults must be identified. In the security field, the most obvious model is that faults are of a "malicious" nature, that is, one has to assume that a faulty component works in a way that aims at causing the worst possible failure. In other words: there are no limits to what it can do, which corresponds to FT's "Byzantine behaviour." Unfortunately, methods of tolerating Byzantine faults are expensive in terms of communication and computing resources as well as in regard to component requirements, which may make them infeasible. On the other hand, more restricted models always introduce the danger of assuming nonexistent properties. Nonetheless, if these properties can be assured, hybrid models with different fault models for different components of a system can become useful. For example, employing devices that are physically tamper-resistant in such a way that they stop working when someone tries to open the case allows the "crash" model to be used for these components, which is much easier to handle.

A word of caution concerning applicability

It is tempting to simply copy approaches, assuming system security to emerge in the same way fault tolerance does, but a well-known example shows that this may be misleading:

Consider the famous Byzantine Generals problem: n principals have to come to the same result to complete a task, so they must communicate. There are a number of traitors amongst them; these t traitors are allowed to act in an arbitrary way. As Lamport showed, the problem can be solved if and only if n ≥ 3t+1 holds.

A system consisting of n ≥ 3t+1 servers trying to agree on one result can be deemed tolerant to t failures. If -- as is the case in most fault tolerance scenarios -- component failures happen independently, such a system is fault tolerant. In the security area, though, the implicit independence assumption becomes troublesome. In a system consisting of n machines sharing the same easily exploited vulnerability, it is clearly violated, so the replication does not provide any additional security.
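The effect of the independence assumption can be made concrete with a short calculation. The sketch below derives the largest tolerable t from the bound n ≥ 3t+1 and then computes the probability that more than t nodes are compromised, assuming each node is compromised independently with probability p. The function names and the value of p are assumptions for illustration only.

```python
from math import comb

def max_traitors(n):
    """Largest t that satisfies n >= 3t + 1."""
    return (n - 1) // 3

def prob_more_than_t_compromised(n, t, p):
    """Probability that more than t of n nodes are compromised,
    assuming independent compromises with per-node probability p."""
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(t + 1, n + 1))

n = 4
t = max_traitors(n)
p = 0.1  # hypothetical probability that a given node is compromised
independent = prob_more_than_t_compromised(n, t, p)
print(t, independent)
```

For n = 4 and p = 0.1 the system is overwhelmed with a probability of about 0.05 under independence. If all four machines share one vulnerability, a single successful exploit compromises all of them, so the probability is simply p = 0.1: replication has made things no better than a single server.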

From that it follows that independence of compromises must somehow be ensured, which can be done in several ways. One is utilizing the traditional prevention paradigm: harden the components to avoid easily exploitable vulnerabilities. For example, physical tamper resistance in combination with effective access controls can prevent nodes from becoming Byzantine. Another option is to use proactive recovery, a bundle of measures that share the characteristic of periodically resetting components to a known (i.e. secure) state and are thus equivalent to Rejuvenation in Fault Tolerance. This parallel is obvious in the approach of restoring an uncompromised state from read-only media every once in a while by restarting nodes. The concept is also applied to cryptographic protocols by changing keys ("re-keying") or certificates on a regular basis, which renders previously gathered keys unusable.

An Example: COCA

Some of these concepts are illustrated by the Cornell Online Certificate Authority, a distributed system of n nodes providing CA services to outside clients that withstands t intrusions as long as n ≥ 3t+1 holds.

Here, intrusion tolerance results from applying Byzantine Agreement in the incarnation of threshold signatures. All replies from the CA as well as all updates to it must be signed by the CA's service key, which consists of a public and a private part. The latter is scattered over the COCA servers; no single COCA server knows the whole private key. Instead, to sign messages with the service key, t+1 servers must collaborate, which forces an attacker to compromise t+1 servers in order to have the CA deliver false certificates. To ensure independence of intrusions, proactive recovery is applied through frequent changes in the private key fragments.
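The "t+1 out of n" property of a scattered key can be illustrated with Shamir's secret sharing. Note that this is a swapped-in, simpler technique and not COCA's actual protocol: a threshold signature scheme produces a signature from partial signatures without ever reassembling the private key, whereas the sketch below reconstructs the secret in one place. The prime, the function names and the parameters are assumptions made for this illustration.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the field size is an arbitrary choice for this sketch

def split_secret(secret, t, n):
    """Split secret into n shares such that any t+1 shares reconstruct it."""
    # Random polynomial of degree t with the secret as constant term.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(t)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):      # Horner's rule, modulo PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, n + 1)]

def reconstruct(shares):
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

# n = 4 servers tolerate t = 1 intrusion; any t + 1 = 2 shares suffice.
shares = split_secret(123456789, t=1, n=4)
print(reconstruct(shares[:2]) == 123456789)
```

A single share is one point on a random line and therefore reveals nothing about the secret, which mirrors the statement that no single server knows the whole private key.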

Bibliography

- Coulouris, Dollimore, Kindberg: Verteilte Systeme (2002)

- Verissimo, Neves, Correia: Intrusion-Tolerant Architectures: Concepts and Design (2003)

- Zhou, Schneider, van Renesse: COCA: A Secure Distributed On-Line Certification Authority (2002)

- Yao: Trust Management for Widely Distributed Systems (2003)

Heap of unused text

Distributed systems allow parts of the system to be located on separate computers and different locations. So business logic and data can be reached from any remote computer (location).

Distributed objects are the most recent development in distributed computing. Distributed object technologies such as Java RMI, CORBA, and DCOM allow objects running on one machine to be used by applications on different computers.

There was a question in the seminar regarding the splitting of secret keys. Read about one possibility at Secret Sharing.