Log-Structured Filesystem: Difference between revisions

| (16 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

A log-structured filesystem is a novel disk storage management technique developed in the late 1980's by John K. Ousterhout and Fred Douglis. It stores data sequentially on the disk rather than scattering blocks all over the disk as most other filesystems do. |

A log-structured filesystem is a novel disk storage management technique developed in the late 1980's by John K. Ousterhout and Fred Douglis. It stores data sequentially on the disk rather than scattering blocks all over the disk as most other filesystems do. |

||

= Overview = |

= Overview = |

||

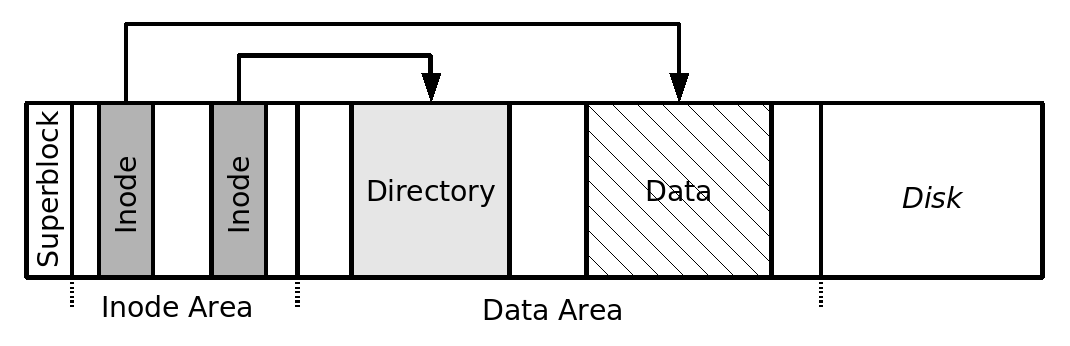

[[Image:lfs-layout-classic.png|thumb|right| |

[[Image:lfs-layout-classic.png|thumb|right|500px|'''Figure 1:''' Layout of classic inode-based filesystems (simplified)]] |

||

Most inode-based filesystems store inode and data blocks seperately in fixed regions on the disk. '''Figure 1''' shows a simplified disk layout of classic inode-based filesystems. This layout induces a substantial ''seek'' overhead when writing files (with read operations this overhead can be ''cached away''). When writing a file the data blocks are written first, then the inode of the file, then the corresponding directory block is updated, then its associated inode is updated. All these operations are preceded by seek operations to locate the on-disk position of the block that is to be accessed. With workloads like those found in office applications that work on many, small files the seek operations consume up to 80% of the raw disk bandwidth. |

|||

The log-structured filesystem tries to remove this overhead by eliminating all seeks and thus reclaiming almost 100% of the disk bandwidth for write operations. |

|||

<br style="clear:both" /> |

|||

= LFS in Detail = |

= LFS in Detail = |

||

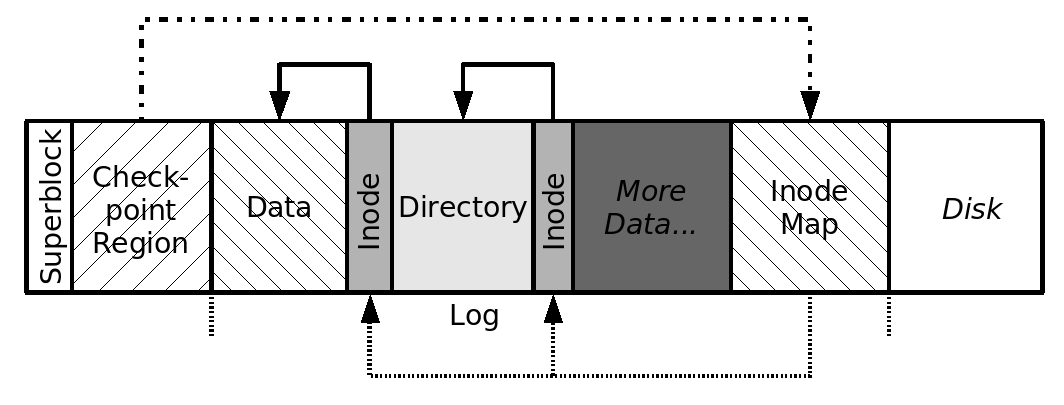

[[Image:Lfs-layout-log.png|thumb|right|500px|'''Figure 2:''' Layout of a log-structured filesystem (simplified)]] |

|||

The basic idea of a log-structured filesystem is to store all information sequentially on disk in a ''log'' structure. This includes all data blocks, inode blocks, directory blocks, indirect blocks, etc. |

The basic idea of a log-structured filesystem is to store all information sequentially on disk in a ''log'' structure. This includes all data blocks, inode blocks, directory blocks, indirect blocks, etc. New information is buffered in a write cache and written to disk in a single, large write operation. This way many, small, synchronous writes of traditional filesystems are translated to few, large, asynchronous, sequential write operations. |

||

== Writing Data == |

|||

== Reading Data == |

== Reading and Writing Data == |

||

One problem that obviously arises from this layout is that since inodes are not at fixed positions some way must be found to locate inodes. This is achieved by storing extra information in the log, the ''Inode Map Blocks''. Inode map blocks store information about all inodes written during one write operation. To keep track of all inode map blocks they are referenced from the ''Checkpoint region''. The inode map is compact enought to be kept in memory, so inode map lookups rarely require disk access. The inode number is used as and index into the inode map and yields the block address of the inode block on disk. From this point on the number of I/Os necessary are the same as in traditional filesystem. With the assumption that files are cached and that caches are large enough to satisfy the majority of the read requests, the LFS performs as good as FFS or better. |

|||

== Free Space Management == |

== Free Space Management == |

||

A more challenging design issue with LFS is the management of free space. The goal here is to maintain large free space to write new date. Unfortunately by the time the log reaches the end of the disk, free space is likely to be fragmented into many small extents. There are basically two choices of how to reuse free data blocks: ''Threading'' and ''Copying''. |

|||

| ⚫ | |||

==== Greedy Cleaning ==== |

|||

''Threading'' means, that the filesystem by some means keeps track of freed blocks and reuses these blocks when writing new data. This is what many other filesystems actually do and where a lot of effort is put into, to make it perform well. The big downside with this choice in LFS is that threading causes severe fragmentation. At some point large, continguous write are impossible, so a lot of time is spent with seeking again and the LFS will perform no better than other filesystems. |

|||

==== Cost / Benefit Cleaning ==== |

|||

''Copying'' on the other side means that live data is copied to contingous blocks (defragmented) and free data blocks are collected to large groups. This avoids fragmentation but the copying is rather expensive. Furthermore long lived files that might remain unmodified for a long time will be copied over and over again. |

|||

SpriteLFS, the prototype implementation of LFS, solves this problem by using a combination of copying and threading. The disk is divided into large, fix sized extents called ''Segments''. These segments are written sequentially from beginning to end. However all live data has to be copied out of a segment before it can be reused. Long lived data is tried to be grouped in segments. These segments of long lived data can then be skipped over to avoid copying the same data over and over again. |

|||

| ⚫ | |||

The process of copying live data out of segments is called ''Segment Cleaning'' and basically takes three steps: Read a number of segments into memory, identify the live data blocks and write the back to a smaller number of clean segments. In order to identify live data blocks when cleaning segments SpriteLFS writes additional data to the log, the ''Segment Summary Block'' and the ''Segment Usage Table Block''. |

|||

The segment summary block identifies all data that is written to the segment in question. For a data block, for example, the segment summary block contains the associated inode number and the offset of the data block within the file aswell as a file-version number that is also stored in the inode. Comparing this version number from the segment summary block with the one stored in the inode indicates if the data block is still in use. |

|||

The segment usage table records how many bytes are still live in a segment and the most recent modification time of any block in the segment. Just like the inode map the segment usage table is kept in memory and all segment usage table blocks are referenced from the checkpoint region. |

|||

Although this information imposes some overhead when writing the data it is useful not only for the cleaning process but also for crash recovery. Another big advantage is that, in contrast to other filesystems, there is no central data structure that stores free blocks. This saves memory, disk capacity and greatly reduces the complexity of filesystem code and crash recovery. |

|||

== Crash Recovery == |

== Crash Recovery == |

||

A position in the log where the filesystem is in a consistent state is called ''Checkpoint'' in LFS. The checkpoint is stored in a fixed position on disk, the ''Checkpoint Region''. It contains addresses of all inode map blocks and segment usage table blocks, a pointer to the last segment written and a timestamp. The checkpoint region is updated periodically or after a given amount of data has been written. In event of a system crash the checkpoint region is read during reboot and used to initialize the data structures. This is sufficient to bring the filesystem into a consistent state. |

|||

= LFS = |

|||

= Links = |

= Links = |

||

*[http://citeseer.ist.psu.edu/rosenblum91design.html Citeseer: Mendel Rosenblum, John K. Ousterhout - The Design and Implementation of a Log-Structured File System] |

|||

*[http://en.wikipedia.org/wiki/Log-structured_filesystem Wikipedia article about log-structured filesystem] |

|||

*[http://nilfs.org/ NILFS Homepage - A log-structured filesystem for linux] |

|||

*[http://www.opensolaris.org/os/community/zfs Sun's ZFS development page] |

|||

Latest revision as of 15:04, 1 February 2007

A log-structured filesystem is a novel disk storage management technique developed in the late 1980's by John K. Ousterhout and Fred Douglis. It stores data sequentially on the disk rather than scattering blocks all over the disk as most other filesystems do.

Overview

Most inode-based filesystems store inode and data blocks seperately in fixed regions on the disk. Figure 1 shows a simplified disk layout of classic inode-based filesystems. This layout induces a substantial seek overhead when writing files (with read operations this overhead can be cached away). When writing a file the data blocks are written first, then the inode of the file, then the corresponding directory block is updated, then its associated inode is updated. All these operations are preceded by seek operations to locate the on-disk position of the block that is to be accessed. With workloads like those found in office applications that work on many, small files the seek operations consume up to 80% of the raw disk bandwidth.

The log-structured filesystem tries to remove this overhead by eliminating all seeks and thus reclaiming almost 100% of the disk bandwidth for write operations.

LFS in Detail

The basic idea of a log-structured filesystem is to store all information sequentially on disk in a log structure. This includes all data blocks, inode blocks, directory blocks, indirect blocks, etc. New information is buffered in a write cache and written to disk in a single, large write operation. This way many, small, synchronous writes of traditional filesystems are translated to few, large, asynchronous, sequential write operations.

Reading and Writing Data

One problem that obviously arises from this layout is that since inodes are not at fixed positions some way must be found to locate inodes. This is achieved by storing extra information in the log, the Inode Map Blocks. Inode map blocks store information about all inodes written during one write operation. To keep track of all inode map blocks they are referenced from the Checkpoint region. The inode map is compact enought to be kept in memory, so inode map lookups rarely require disk access. The inode number is used as and index into the inode map and yields the block address of the inode block on disk. From this point on the number of I/Os necessary are the same as in traditional filesystem. With the assumption that files are cached and that caches are large enough to satisfy the majority of the read requests, the LFS performs as good as FFS or better.

Free Space Management

A more challenging design issue with LFS is the management of free space. The goal here is to maintain large free space to write new date. Unfortunately by the time the log reaches the end of the disk, free space is likely to be fragmented into many small extents. There are basically two choices of how to reuse free data blocks: Threading and Copying.

Threading means, that the filesystem by some means keeps track of freed blocks and reuses these blocks when writing new data. This is what many other filesystems actually do and where a lot of effort is put into, to make it perform well. The big downside with this choice in LFS is that threading causes severe fragmentation. At some point large, continguous write are impossible, so a lot of time is spent with seeking again and the LFS will perform no better than other filesystems.

Copying on the other side means that live data is copied to contingous blocks (defragmented) and free data blocks are collected to large groups. This avoids fragmentation but the copying is rather expensive. Furthermore long lived files that might remain unmodified for a long time will be copied over and over again.

SpriteLFS, the prototype implementation of LFS, solves this problem by using a combination of copying and threading. The disk is divided into large, fix sized extents called Segments. These segments are written sequentially from beginning to end. However all live data has to be copied out of a segment before it can be reused. Long lived data is tried to be grouped in segments. These segments of long lived data can then be skipped over to avoid copying the same data over and over again.

Segment Cleaning

The process of copying live data out of segments is called Segment Cleaning and basically takes three steps: Read a number of segments into memory, identify the live data blocks and write the back to a smaller number of clean segments. In order to identify live data blocks when cleaning segments SpriteLFS writes additional data to the log, the Segment Summary Block and the Segment Usage Table Block.

The segment summary block identifies all data that is written to the segment in question. For a data block, for example, the segment summary block contains the associated inode number and the offset of the data block within the file aswell as a file-version number that is also stored in the inode. Comparing this version number from the segment summary block with the one stored in the inode indicates if the data block is still in use.

The segment usage table records how many bytes are still live in a segment and the most recent modification time of any block in the segment. Just like the inode map the segment usage table is kept in memory and all segment usage table blocks are referenced from the checkpoint region.

Although this information imposes some overhead when writing the data it is useful not only for the cleaning process but also for crash recovery. Another big advantage is that, in contrast to other filesystems, there is no central data structure that stores free blocks. This saves memory, disk capacity and greatly reduces the complexity of filesystem code and crash recovery.

Crash Recovery

A position in the log where the filesystem is in a consistent state is called Checkpoint in LFS. The checkpoint is stored in a fixed position on disk, the Checkpoint Region. It contains addresses of all inode map blocks and segment usage table blocks, a pointer to the last segment written and a timestamp. The checkpoint region is updated periodically or after a given amount of data has been written. In event of a system crash the checkpoint region is read during reboot and used to initialize the data structures. This is sufficient to bring the filesystem into a consistent state.