OLSR (Optimized Link-State Routing)

Potential causes for packet loss in RoofNet

Many existing routing algorithms rely on the assumption that the neighbor abstraction holds. This is certainly true for wired networks. The research community has not reached a consensus on whether it also holds for wireless networks.

If it were true, any given pair of nodes would either be able to communicate with each other or not be able to communicate at all. We could therefore expect loss rates to be either very low or very high, depending on the node pair.
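The expectation above can be made concrete with a minimal sketch that sorts per-pair loss rates into low, intermediate and high buckets. The function name `classify_links`, the 10% and 90% thresholds, and the sample values are assumptions chosen for illustration; they are not taken from the RoofNet experiments.

```python
def classify_links(loss_rates, low=0.1, high=0.9):
    """Count node pairs with low, intermediate and high loss rates.

    The thresholds `low` and `high` are illustrative assumptions.
    """
    counts = {"low": 0, "intermediate": 0, "high": 0}
    for loss in loss_rates:
        if loss <= low:
            counts["low"] += 1
        elif loss >= high:
            counts["high"] += 1
        else:
            counts["intermediate"] += 1
    return counts


# Hypothetical loss rates for a network in which the neighbor
# abstraction holds: every pair is either nearly lossless or nearly dead.
ideal = [0.01, 0.02, 0.98, 1.0, 0.03, 0.99]
print(classify_links(ideal))
```

If the neighbor abstraction held, the intermediate bucket would stay nearly empty, as it does for the hypothetical `ideal` list.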

Without claiming general validity for all kinds of wireless networks, the experiments show that the neighbor abstraction does not hold for RoofNet. One of the experiments revealed a surprising pattern of loss rates for this particular network: apparently, intermediate loss rates are the common case in RoofNet. As the graphic below shows, the majority of the node pairs that can communicate exhibit intermediate loss rates, which makes the pairs with very high or very low loss rates the marginal cases.

The question arising from this result is how this particular pattern can be explained. In search of an answer, the researchers investigated, among others, the following hypotheses as potential causes:

- The effects of node proximity

- The effects of signal-to-noise ratio

- The effects of transmit bitrate

- The effects of multi-path fading

The following sections briefly summarize the results for each hypothesis.

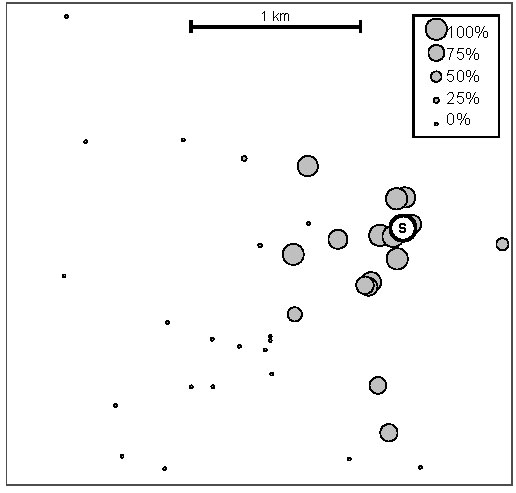

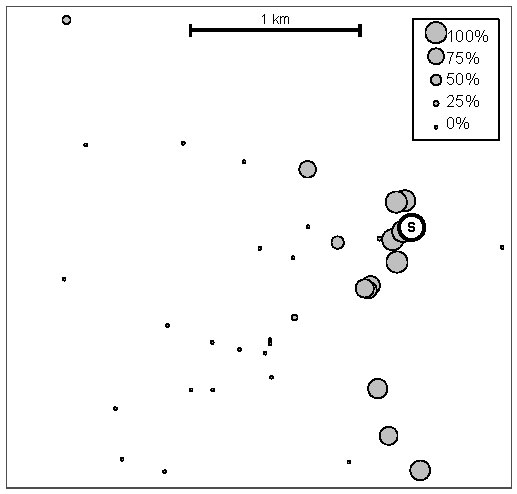

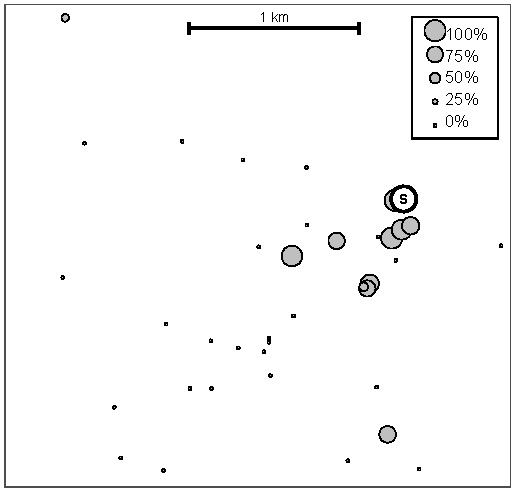

Effects of node proximity on loss rates

At first glance, explaining the loss-rate pattern as a function of node distance seems to be a very promising approach. However, the data obtained from the experiments shows that node proximity is a very weak predictor of loss rates. The following three graphics support this result. They show the delivery probabilities from three sender nodes to all other RoofNet nodes. The larger the diameter of a circle, the higher the delivery probability to the corresponding RoofNet node.

For inter-node distance to be the explanation for the distribution of the loss rates, there would have to be a correlation between the distance and the resulting delivery probability. One would expect the received signal to be consistently weaker the greater the distance to the source node.

Surprisingly, this is not the case. Inter-node distance certainly determines the delivery probability to some extent, but the correlation is not consistent. As the graphics show, even very small variations in the sender position have tremendous effects on the resulting pattern of delivery probabilities. If node proximity were the decisive factor, we would certainly see a stronger correlation.
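One way to quantify how consistently delivery probability follows distance is a correlation coefficient. The sketch below computes the Pearson coefficient by hand; the function name `pearson` and all sample values are assumptions for illustration and are not RoofNet measurements, nor is this necessarily the analysis the researchers performed.

```python
import math


def pearson(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical values for illustration only.
distances_m = [100, 200, 300, 400]
consistent = [0.9, 0.7, 0.5, 0.3]    # delivery falls steadily with distance
inconsistent = [0.4, 0.9, 0.2, 0.6]  # no clear trend with distance

print(pearson(distances_m, consistent))
print(pearson(distances_m, inconsistent))
```

A coefficient close to -1 would indicate that distance is a strong predictor of delivery probability, while a value near 0, as for the hypothetical `inconsistent` list, would indicate a weak one.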

The effect of signal-to-noise ratio

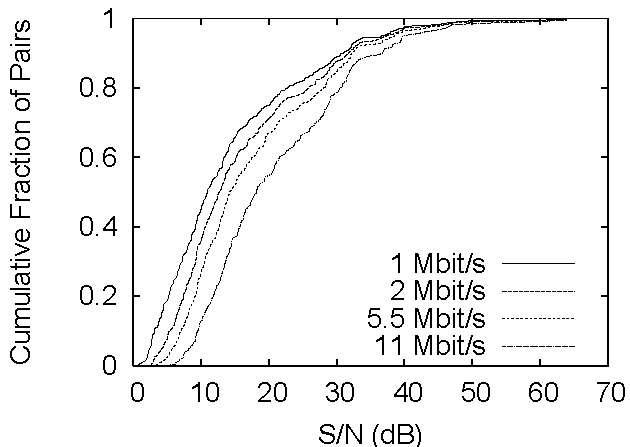

The predominance of intermediate loss rates could be due to marginal signal-to-noise values for the majority of the nodes. The 802.11 cards used in RoofNet are based on the Prism chipset. According to the Prism specification, the range of signal-to-noise values that result in a packet error rate between 10% and 90% is 3 dB wide.

For the signal-to-noise ratio to account for the intermediate delivery probabilities, most of the node pairs in RoofNet would have to fall within this narrow range.

But again, the experiments reveal that reality looks different. As the following graphic shows, the range of signal-to-noise ratios is much wider than expected.

The graphic implies that the signal-to-noise ratio is not a decisive factor for the distribution of loss rates.
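The check described in this section can be sketched as follows: take the node pairs with intermediate loss rates and measure how widely their signal-to-noise values are spread. The function name `intermediate_snr_spread` and the sample links are hypothetical; only the 10% to 90% error-rate band and the 3 dB width come from the text above.

```python
def intermediate_snr_spread(links, low=0.1, high=0.9):
    """Return the SNR spread in dB among links with intermediate loss.

    `links` is a list of (snr_db, loss_rate) tuples. Returns None if
    no link has a loss rate strictly between `low` and `high`.
    """
    snrs = [snr for snr, loss in links if low < loss < high]
    if not snrs:
        return None
    return max(snrs) - min(snrs)


PRISM_WINDOW_DB = 3.0  # width of the 10%..90% error-rate band per the text

# Hypothetical (snr_db, loss_rate) pairs, not RoofNet measurements.
links = [(5.0, 0.5), (12.0, 0.4), (25.0, 0.6), (30.0, 0.02)]

spread = intermediate_snr_spread(links)
print(spread, spread <= PRISM_WINDOW_DB)
```

If marginal signal-to-noise values were the cause, the spread would be expected to stay close to the 3 dB window; a much larger spread, as in the hypothetical data here, would speak against that explanation.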

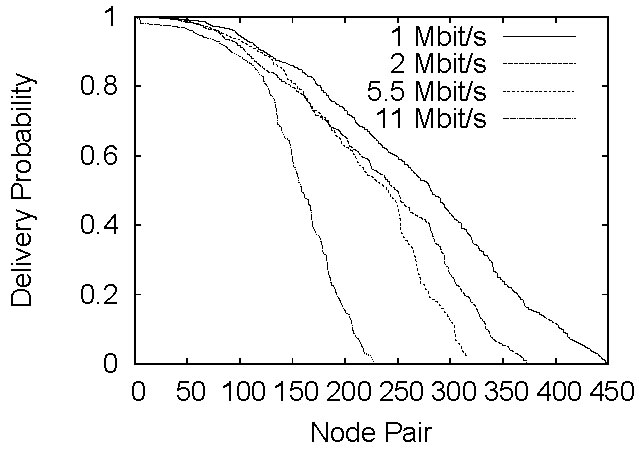

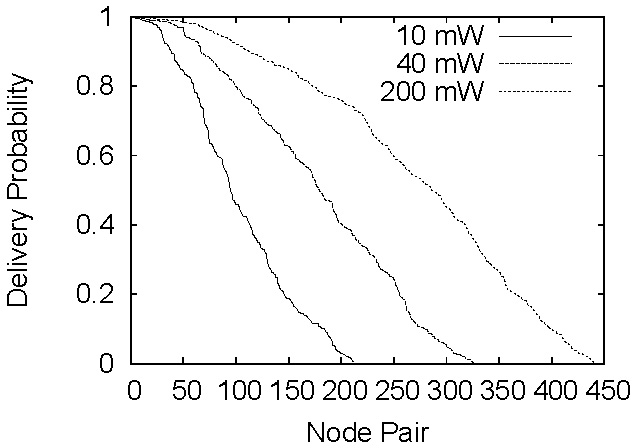

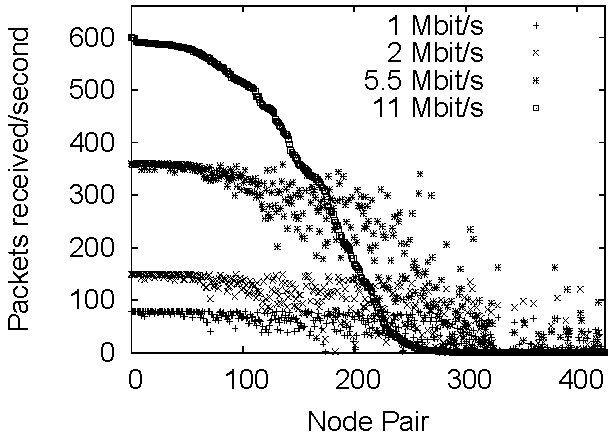

The effect of transmit bitrate

As far as transmit bitrate is concerned, a couple of interesting implications can be drawn from the experiments. They show that the transmit bitrate that provides the best throughput is not the one with the minimum loss rate. On the contrary, the optimum bitrate of 11 Mbps exhibits a significant loss rate. The results of a corresponding experiment are visualized in the following figures.

These results suggest a change in the policy of bitrate selection algorithms: instead of reducing the bitrate immediately when transmission errors occur, a bitrate selection algorithm for wireless networks should wait, because despite the errors and the necessary retransmissions, a higher bitrate performs significantly better than a lower one at its optimum.
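The reasoning can be illustrated with a deliberately simplified model in which the effective throughput is the bitrate multiplied by the fraction of packets delivered. This ignores protocol overhead and retransmission backoff, and the function names and loss rates below are assumptions for illustration, not values from the experiments.

```python
def effective_throughput(bitrate_mbps, loss_rate):
    """Simplified model: throughput is the bitrate times the delivery probability."""
    return bitrate_mbps * (1.0 - loss_rate)


def best_bitrate(loss_by_bitrate):
    """Return the bitrate with the highest effective throughput."""
    return max(
        loss_by_bitrate,
        key=lambda rate: effective_throughput(rate, loss_by_bitrate[rate]),
    )


# Hypothetical loss rate per 802.11b bitrate in Mbps.
loss_by_bitrate = {1.0: 0.0, 2.0: 0.05, 5.5: 0.1, 11.0: 0.4}

for rate, loss in loss_by_bitrate.items():
    print(rate, round(effective_throughput(rate, loss), 2))
print("best:", best_bitrate(loss_by_bitrate))
```

Under these assumed numbers, 11 Mbps still comes out ahead although it has by far the highest loss rate, which is the situation in which stepping down the bitrate at the first errors would cost throughput.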

The effect of multi-path fading

The fact that so many node pairs in RoofNet have intermediate delivery probabilities is probably due to the phenomenon of multi-path fading. Of all the experiments performed in this context, the one investigating the effect of multi-path fading produced by far the strongest results.

The challenge that multi-path fading poses to the manufacturers of 802.11 cards is illustrated in the following figure.