ChunkCast: An Anycast Service for Large Content Distribution: Difference between revisions

→Problems of the existing approaches: Corrected the inter-paragraph spacing |

|||

| (One intermediate revision by the same user not shown) | |||

| Line 103: | Line 103: | ||

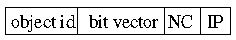

As for the implementation of the object level query, the concept of a bit vector is used. The bit vector as a whole represents the object, while each bit in this vector stands for a particular chunk of the object. In a publish message, the set bits in the vector represent the owned chunks. But the publish message itself has to bear more information than just the chunks the peer owns. It also contains an object identifier, which is the SHA-1 hash of the object name. The locality information is represented by so called Network coordinates, which provide a possibility to estimate the distance betwenn two peers without having to use pings. A third information, the publish message has to conain is of course the IP address of the publishing peer. |

As for the implementation of the object level query, the concept of a bit vector is used. The bit vector as a whole represents the object, while each bit in this vector stands for a particular chunk of the object. In a publish message, the set bits in the vector represent the owned chunks. But the publish message itself has to bear more information than just the chunks the peer owns. It also contains an object identifier, which is the SHA-1 hash of the object name. The locality information is represented by so called Network coordinates, which provide a possibility to estimate the distance betwenn two peers without having to use pings. A third information, the publish message has to conain is of course the IP address of the publishing peer. |

||

The publish message format is shown in the figure below. |

The publish message format is shown in the figure below. |

||

| Line 113: | Line 112: | ||

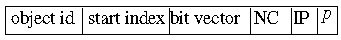

To search for data, a client peer has to issue a lookup message. The format of this message looks similar to the one of the publish message. It is shown in the following figure: |

To search for data, a client peer has to issue a lookup message. The format of this message looks similar to the one of the publish message. It is shown in the following figure: |

||

{|width="100%" border="0" |

{|width="100%" border="0" |

||

| Line 122: | Line 120: | ||

The start index, is used to allow a compact representaiton of the message. An object can potentially be partitioned into many chunks, resulting in a long bit vector. If an object consists of <math>n</math> chunks, and the peer does only need the last three chunks, the start index can be set to <math>n-3</math>, resulting in a bit vector of the length 3 (as opposed to <math>n</math>). |

The start index, is used to allow a compact representaiton of the message. An object can potentially be partitioned into many chunks, resulting in a long bit vector. If an object consists of <math>n</math> chunks, and the peer does only need the last three chunks, the start index can be set to <math>n-3</math>, resulting in a bit vector of the length 3 (as opposed to <math>n</math>). |

||

The last field <math>p</math>, indicates the number of parallel connections, that the client peer can accept, determining an upper bound for the size of the response list. |

The last field <math>p</math>, indicates the number of parallel connections, that the client peer can accept, determining an upper bound for the size of the response list. |

||

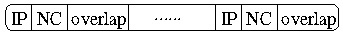

The last message format sketched here is the response message format. It is shown in the following figure: |

The last message format sketched here is the response message format. It is shown in the following figure: |

||

{|width="100%" border="0" |

{|width="100%" border="0" |

||

| Line 133: | Line 128: | ||

|- |

|- |

||

|} |

|} |

||

ChunkCast responds with the IP addresses of the peers, that posess any of the needed chunks. These peers passed the evaluation by two ranking functions. One of the functions optimizes the bit overlap, meaning that it compares the bit vector in the lookup message with the bit vectors from the publish messages for this paritcular object. The more bits correspond here, the better the ranking is. An information on how many relevant chunks a given peer holds is then included in the response message in the field ''overlap''. |

|||

The second function rates the distance between the peers. A feedback about this is encoded in the Network Coordinate field. |

|||

=References= |

=References= |

||

Latest revision as of 15:23, 19 July 2007

(The work on this article is still in progress. The current state shall not be regarded as final.)

Motivation

The idea of exchanging large amounts of data like video or audio files via the internet is not a new one. To allow this, so called Content Distribution Networks (CDNs) have been designed. The goal of these networks is to provide exactly the described functionality. Still, the efficient implementation of a CDN remains a challange.

A major problem to be dealt with when it comes to designing a CDN is the overhead, that is imposed by the user queries. The general structure of a CDN will be described later in this article. At this point it is important to see, that we generally want to keep this overhead small to provide as much bandwidth as possible for the actual upload and download activities.

Minimizing this overhead will result in better performance of the CDN in terms of the download time.

The amount of bandwidth, consumed by the user queries is determined by a number of factors, but particularly by the structure of the CDN. We will therefore have to take a closer look at the structure to propose any improvements.

The general structure of a CDN

CDNs are basically peer-to-peer networks, meaning that the structure is a rather loose and unplanned one with peers constantly joining and leaving the network. In contrast to the Client-Server model, where the roles of the nodes in the network are set, a peer can act as a client, as a server, or even simultaniously as both depending on the situation.

The files circulated in the CDN are called objects. Typically each object is partitioned into a number of chunks. The chunks in turn are striped between the peers in the network.

In general, a CDN works as follows:

- A client peer wanting to download an object issues a lookup message for each chunk of this object.

- A directory storing the mappings between chunks and peers issues a response message containig all the peers, that store the concerned chunk.

- The client peer chooses a server peer from the obtained list and initiates the download.

- When the download completes, the client peer advertises itself in the directory as an owner of the chunk.

Now that we know the general structure of a CDN, we can perform a critical analysis of the existing implementations of CDNs.

Problems of the existing approaches

Identifiing the main problems and bottlenecks of the existing implementations, is a second crucial step on the way to designing an improved CDN. The challenges are:

- The number of queries can be huge, so performance problems are going to be an issue

- The general structure, as described above, ignores two important aspects:

- the peer selection (which peers should be regarded as optimal)

- the chunk selection (in which order should the chunks be downloaded)

Consequently two major drawbacks can be stated:

- the structure ignores the effects of locality

- because all queries are directed towards a central directory, and each query relates to only one chunk, the approach is not scalable

The following secitons concentrate on these drawbacks. There will be suggestions on how to tackle them. These suggestions will be presented using the example of ChunkCast.

Efficient queries

A model for an ideal CDN would have the following properties:

- When a client peer issues a lookup message, the resulting server peers should:

- be located nearby in terms of network latency

- provide a high maximum bandwidth

- provide a high available bandwidth

- Only one peer in a given area downloads a particular remote chunk. If another peer in this area should also need this chunk, the download takes place locally.

Up to now, the existing implementations of CDNs satisfy only a subset of the desired properties. Very roughly they can be organized into two groups:

- Downloading the chunks from a small set of peers A lookup query results in a small list of server peers, that own a particular chunk. The problem here is, that this approach potentially wastes bandwidth, if nearby server peers exist, that posses the requested chunk, but were not included in the list.

- Downloading the chunks from an optimal peer As in the previous case, a chunk lookup query results in a list of server peers possessing the particular chunk. The client peer then selects an optimal peer rated by some rating function.

The problem with this approach is, that the aspect of locality is not taken into account. It is possible, that two nearby client peers download the same chunk from remote peers.

Obviously what is needed here is a solution that:

- Integrates locality information to improve the results of lookup queries

- gives guidance on the order, in which the chunks should be downloaded

As noted earlier in this article, we need an approach that respects both the peer selection and the chunk selection rather than to concentrate on only one of these criteria.

To achieve this, a global view on the chunks in the directory is needed. A view that on the one hand carries information about the set of chunks, that are currently residing in the directory. On the other hand a client peer should get a hint about the distance to any server peer holding a relevant chunk.

The lookup query used in ChunkCast--which will be described next--has these properties, because it results in a list of server peers that satisfy the following conditions:

- they are the closest possible peers

- the list contains peers, that hold any one of the chunks the client peer might need

ChunkCast

ChunkCast is an innovative anycast service, that was developed and introduced by a group of researchers from the university of Berkeley, California. The advantage of ChunkCast is, that it allows a speedup of ca. 32\% in terms of the median download time. This is achieved due to a redesign of the publish and lookup queries, which will be described below.

The general idea is, that peers, in a peer-to-peer network use the ChunkCast service for two purposes:

- The chunk lookup

- The chunk publication

The actual data download is carried out between the peers and ChunkCast does not interfere here. The following figure illustrates this approach.

|

ChunkCast provides an interface to the peers. It uses a distributed indexing service, that intergrates locality information. The benefit of this approach is, that it is possible to explicitly guide the peers in the processes of peer- and chunk selection.

ChunkCast is build on top of a structured overlay and therefore inherits many advantages regarding the routing of messages in a decentralized network. The novel property of ChunkCast is, that chunks are represented on the object level. Thus an object is represented as the sum of its chunks.

This object-level representation has the advantage, that the indexing trees which are used to provide the routing information in the network, are constructed per object and not per chunk, as it was the case in the previous approaches.

The next section provides a brief overview of the message formats used in ChunkCast.

Message formats in ChunkCast

As for the implementation of the object level query, the concept of a bit vector is used. The bit vector as a whole represents the object, while each bit in this vector stands for a particular chunk of the object. In a publish message, the set bits in the vector represent the owned chunks. But the publish message itself has to bear more information than just the chunks the peer owns. It also contains an object identifier, which is the SHA-1 hash of the object name. The locality information is represented by so called Network coordinates, which provide a possibility to estimate the distance betwenn two peers without having to use pings. A third information, the publish message has to conain is of course the IP address of the publishing peer. The publish message format is shown in the figure below.

To search for data, a client peer has to issue a lookup message. The format of this message looks similar to the one of the publish message. It is shown in the following figure:

The start index, is used to allow a compact representaiton of the message. An object can potentially be partitioned into many chunks, resulting in a long bit vector. If an object consists of chunks, and the peer does only need the last three chunks, the start index can be set to , resulting in a bit vector of the length 3 (as opposed to ). The last field , indicates the number of parallel connections, that the client peer can accept, determining an upper bound for the size of the response list. The last message format sketched here is the response message format. It is shown in the following figure:

ChunkCast responds with the IP addresses of the peers, that posess any of the needed chunks. These peers passed the evaluation by two ranking functions. One of the functions optimizes the bit overlap, meaning that it compares the bit vector in the lookup message with the bit vectors from the publish messages for this paritcular object. The more bits correspond here, the better the ranking is. An information on how many relevant chunks a given peer holds is then included in the response message in the field overlap.

The second function rates the distance between the peers. A feedback about this is encoded in the Network Coordinate field.