Tls handshake schnell sicher: Difference between revisions


Revision as of 18:24, 28 October 2024

Introduction

When we communicate on the Internet, we want to be sure who we are interacting with. By default, most protocols neither ensure that the services you are communicating with authenticate themselves nor protect your messages from eavesdropping. To ensure authenticity and privacy, TLS can be used.

TLS is a cryptographic protocol used to encrypt the data transfer between communication partners. It is located in the application layer of the Internet Protocol Suite (IP Suite) or in the presentation layer of the OSI model. It is typically used as a wrapper for other (insecure) protocols like HTTP or SMTP. Besides encryption, TLS can also be used to authenticate both communication partners. There are several versions of TLS, and all versions below 1.2 are deprecated. The current versions 1.2[1] and 1.3[2] dropped support for insecure hash functions like MD5 or SHA224. An unconfigured TLS 1.3 connection (e.g. with OpenSSL's default settings) typically uses the cipher suite TLS_AES_256_GCM_SHA384.
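From an application's point of view, most of this comes down to configuring the TLS library. The following minimal Python sketch uses the standard-library ssl module. Python is used here only for illustration; the article itself does not use it. The sketch builds a client context that verifies certificates and refuses the deprecated versions below TLS 1.2. It also lists the TLS 1.3 cipher suites offered by the local OpenSSL build. The helper names are hypothetical, and the exact suite list depends on the OpenSSL version and the system's crypto policy.

```python
import socket
import ssl
from typing import List, Tuple


def make_client_context() -> ssl.SSLContext:
    """Hypothetical helper: certificate-verifying client context, TLS >= 1.2 only."""
    ctx = ssl.create_default_context()            # CERT_REQUIRED + hostname checking
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # reject deprecated TLS 1.0/1.1
    return ctx


def tls13_suites(ctx: ssl.SSLContext) -> List[str]:
    """Names of the TLS 1.3 cipher suites this context would offer."""
    return [c["name"] for c in ctx.get_ciphers() if c["protocol"] == "TLSv1.3"]


def negotiated(host: str, port: int = 443) -> Tuple[str, str]:
    """Wrap a plain TCP socket in TLS and report version and cipher suite (needs network)."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]


if __name__ == "__main__":
    ctx = make_client_context()
    print("minimum version:", ctx.minimum_version.name)
    print("TLS 1.3 suites:", tls13_suites(ctx))
```

Calling `negotiated("example.org")` performs a real handshake. It returns the protocol version and the name of the cipher suite that was actually negotiated with that server.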

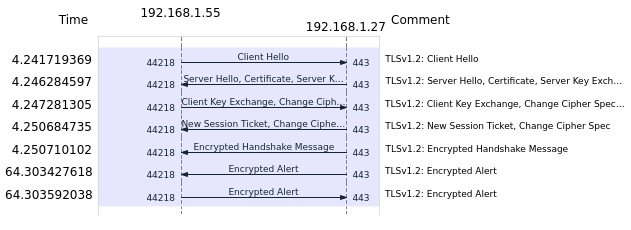

Connection Handshake

A typical TLS 1.2 handshake without client authentication proceeds as follows:

- Client Hello: the client initiates the connection to the server. It transmits its list of supported cipher suites and possibly requests certificate status information (OCSP stapling, via the status_request extension)

- Server Hello: the server replies with the cipher suite it has chosen from the client's list

- Server-Certificate-Exchange: the server sends its certificate along with the certificate chain (and a valid OCSP response if OCSP stapling is configured)

- Client-Certificate-Exchange: the client checks the validity of the certificate

- Key exchange: client and server establish a shared session key using one of the following methods:

  - random data (the pre-master secret) generated by the client is encrypted with the server's public key and sent to the server; both parties then derive the session key from it together with the random values of both Hello messages

  - a shared key is derived via a Diffie-Hellman key exchange
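The Diffie-Hellman variant can be sketched in Python as follows. Each party combines its own private value with the peer's public value, so both arrive at the same pre-master secret without ever transmitting it. They then mix in the random values from both Hello messages using the TLS 1.2 pseudo-random function (PRF, RFC 5246, section 5) to obtain the 48-byte master secret, from which the session keys are derived. The 64-bit prime and the base 5 are toy parameters chosen for readability and are not secure. Real deployments use standardized groups or elliptic curves (ECDHE).

```python
import hashlib
import hmac
import secrets

# Toy Diffie-Hellman parameters (assumption for illustration only):
# P = 2**64 - 59 is prime, but far too small to be secure.
P = 2**64 - 59
G = 5


def p_sha256(secret: bytes, seed: bytes, length: int) -> bytes:
    """P_SHA256 data expansion function from RFC 5246, section 5."""
    out = b""
    a = seed  # A(0) = seed
    while len(out) < length:
        a = hmac.new(secret, a, hashlib.sha256).digest()  # A(i) = HMAC(secret, A(i-1))
        out += hmac.new(secret, a + seed, hashlib.sha256).digest()
    return out[:length]


def master_secret(pre_master: bytes, client_random: bytes, server_random: bytes) -> bytes:
    """PRF(pre_master_secret, "master secret", client_random + server_random)[0..47]."""
    return p_sha256(pre_master, b"master secret" + client_random + server_random, 48)


def to_bytes(z: int) -> bytes:
    """Big-endian encoding without leading zero bytes, as used for DH pre-master secrets."""
    return z.to_bytes((z.bit_length() + 7) // 8, "big")


# Random values exchanged in Client Hello and Server Hello (32 bytes each).
client_random = secrets.token_bytes(32)
server_random = secrets.token_bytes(32)

# Each side picks a private exponent and publishes G^x mod P.
a = secrets.randbelow(P - 2) + 1
b = secrets.randbelow(P - 2) + 1
A, B = pow(G, a, P), pow(G, b, P)

# Both sides compute the same shared value from the peer's public value.
client_pre_master = to_bytes(pow(B, a, P))
server_pre_master = to_bytes(pow(A, b, P))

client_ms = master_secret(client_pre_master, client_random, server_random)
server_ms = master_secret(server_pre_master, client_random, server_random)
print(client_ms == server_ms, len(client_ms))  # True 48
```

Only A, B and the two random values ever travel over the network. The private exponents a and b stay with their owners.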

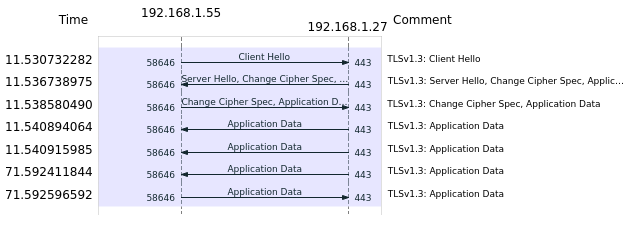

In TLS 1.3 the handshake was revised to save a round trip; it now proceeds as follows:

- Client Hello: the client initiates the connection to the server and sends its list of supported cipher suites together with its key share for the key exchange

- Server Hello and Change Cipher Spec:

  - if the server knows and supports one of the cipher suites, it replies with its own key share and sends its certificate, the certificate chain and possibly an OCSP response

  - the server signals that it has started encrypted messaging

- Client Change Cipher Spec: the client responds that it has also started encrypted messaging

Unlike TLS 1.2, TLS 1.3 no longer allows a connection to be renegotiated down to an insecure older version on request. Instead, it refuses to complete the handshake, so no connection is established.

Whether the server or client certificate is checked depends on the implementation, but checking is strongly advised so as not to establish connections with a malicious actor using a compromised certificate. This involves not only building the issuer chain up to a trusted point, but also checking the revocation status of the certificate in question. That is done either by OCSP[3] (Online Certificate Status Protocol), which is the preferred option, or by CRL[4] (Certificate Revocation List). Both methods are susceptible to denial-of-service attacks. For this reason, Chromium-based web browsers, for example, use neither method when connecting to https addresses and rely on built-in CA white- and blacklists instead.
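The following Python snippet sketches strict certificate checking in a client, using the standard-library ssl module; the helper name and the CRL file path are hypothetical. It accepts only TLS 1.3, verifies the certificate chain and the hostname, and can optionally check the server certificate against a CRL. Python's ssl module has no built-in OCSP client, so only the CRL variant is shown. The CRL file itself has to be obtained and refreshed separately.

```python
import ssl
from typing import Optional


def make_strict_client_context(crl_file: Optional[str] = None) -> ssl.SSLContext:
    """Hypothetical helper: TLS 1.3-only client context with optional CRL checking."""
    ctx = ssl.create_default_context()            # system CA store, CERT_REQUIRED, hostname check
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3  # no fallback to older protocol versions
    if crl_file is not None:
        # PEM file containing the CRL(s); without a matching CRL, verification fails.
        ctx.load_verify_locations(cafile=crl_file)
        ctx.verify_flags |= ssl.VERIFY_CRL_CHECK_LEAF  # check the peer (leaf) certificate only
    return ctx


if __name__ == "__main__":
    ctx = make_strict_client_context()
    print(ctx.minimum_version.name, ctx.verify_mode.name, ctx.check_hostname)
```

VERIFY_CRL_CHECK_LEAF checks only the peer certificate. Checking the intermediate CA certificates as well would require VERIFY_CRL_CHECK_CHAIN and CRLs for every level of the chain.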

Performance considerations

Instead of the standard AES-256 algorithm, the ChaCha20 algorithm may be used, which is typically faster than a software implementation of AES-256. However, current CPUs provide instructions that execute AES in hardware, and tools like OpenSSL can use them. We compared OpenSSL speed tests for AES and for ChaCha20, each with and without these CPU instructions, on an Intel Xeon Gold 6150. The OPENSSL_ia32cap[5] environment variable was used to disable the AES or AVX-512 CPU instructions for testing. The results are as follows:

[1] $ OPENSSL_ia32cap="~0x200000200000000" openssl speed -elapsed -aead -evp aes-256-gcm

[2] $ openssl speed -elapsed -aead -evp aes-256-gcm

[3] $ OPENSSL_ia32cap="0x7ffaf3ffffebffff:0x27ab" openssl speed -elapsed -aead -evp chacha20-poly1305

[4] $ openssl speed -elapsed -aead -evp chacha20-poly1305

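Measured throughput in 1000s of bytes per second (as reported by openssl speed) for each buffer size. The row numbers refer to the commands [1] to [4] above: rows 1 and 3 were measured with the AES or AVX-512 CPU instructions disabled, rows 2 and 4 with them enabled.

| # | type | 2 bytes | 31 bytes | 136 bytes | 1024 bytes | 8192 bytes | 16384 bytes |
|---|---|---|---|---|---|---|---|
| 1 | AES-256-GCM | 3912.58k | 43681.83k | 119433.57k | 220805.46k | 240091.14k | 241401.86k |
| 2 | AES-256-GCM | 12352.14k | 156771.30k | 600536.56k | 2364060.33k | 3829205.67k | 4008596.82k |
| 3 | ChaCha20-Poly1305 | 4561.57k | 67435.59k | 185287.58k | 472737.11k | 557932.54k | 565346.30k |
| 4 | ChaCha20-Poly1305 | 5406.06k | 79034.59k | 256344.41k | 1439373.99k | 2491817.98k | 2634612.74k |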
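To make the table easier to read, the following Python snippet computes a few ratios from the 16384-byte column, using only the measured values above. At this buffer size, the AES CPU instructions speed up AES-256-GCM by a factor of about 16.6. Without hardware support, ChaCha20-Poly1305 is about 2.3 times faster than AES-256-GCM. With hardware support, AES-256-GCM is about 1.5 times faster than ChaCha20-Poly1305.

```python
# Throughput for 16384-byte buffers, copied from the table above
# (unit: 1000s of bytes per second, as printed by `openssl speed`).
THROUGHPUT_16K = {
    1: 241401.86,   # AES-256-GCM, AES CPU instructions disabled
    2: 4008596.82,  # AES-256-GCM, default CPU features
    3: 565346.30,   # ChaCha20-Poly1305, AVX-512 disabled
    4: 2634612.74,  # ChaCha20-Poly1305, default CPU features
}


def ratio(fast: int, slow: int) -> float:
    """Throughput of run `fast` divided by throughput of run `slow`."""
    return THROUGHPUT_16K[fast] / THROUGHPUT_16K[slow]


print(f"AES: hardware vs. software       {ratio(2, 1):.1f}x")  # 16.6x
print(f"ChaCha20: default vs. no AVX-512 {ratio(4, 3):.1f}x")  # 4.7x
print(f"software: ChaCha20 vs. AES       {ratio(3, 1):.1f}x")  # 2.3x
print(f"accelerated: AES vs. ChaCha20    {ratio(2, 4):.1f}x")  # 1.5x
print(f"AES throughput (run 2): {THROUGHPUT_16K[2] * 1000 / 1e9:.1f} GB/s")  # 4.0 GB/s
```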

Another factor that may lead to a performance gain on Unix-like operating systems is kernel TLS (kTLS)[6][7]. With kTLS, the handshake is still completed in userspace, after which the encryption and decryption of the TLS records are offloaded from the userspace program to kernel-space functions. The kernel has to support this: kTLS is typically available since Linux kernel version 5.1 (AES-256-GCM) or 5.11 (CHACHA20-POLY1305), and since FreeBSD 13.0[8]. On Linux, the following command shows whether the tls module is loaded:

$ lsmod|grep tls

tls 151552 0
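The same check can be sketched in Python by reading /proc/modules, the file lsmod takes its data from. Unlike `grep tls`, the hypothetical helper below matches the module name exactly. The grep would also match lines where "tls" is only part of another module's name or appears in the list of dependent modules. If kTLS support is compiled into the kernel rather than built as a module, it does not appear in lsmod or /proc/modules at all.

```python
from pathlib import Path


def tls_module_loaded(modules_text: str) -> bool:
    """True if a module named exactly 'tls' is listed (format of /proc/modules)."""
    for line in modules_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "tls":
            return True
    return False


if __name__ == "__main__":
    proc_modules = Path("/proc/modules")  # the file lsmod reads its data from
    if proc_modules.exists():
        print("tls module loaded:", tls_module_loaded(proc_modules.read_text()))
```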

Furthermore, the application must also support kTLS, either natively or by using an external library such as OpenSSL. Actual usage by programs is tracked via the following counters. In the example below, TlsTxSw shows that 91967 connections have used kTLS for sending data, while TlsCurrTxSw shows that none is using it at the moment of querying.

$ cat /proc/net/tls_stat

TlsCurrTxSw 0

TlsCurrRxSw 0

TlsCurrTxDevice 0

TlsCurrRxDevice 0

TlsTxSw 91967

TlsRxSw 0

TlsTxDevice 0

TlsRxDevice 0

TlsDecryptError 0

TlsRxDeviceResync 0

TlsDecryptRetry 0

TlsRxNoPadViolation 0
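The counters can also be evaluated programmatically. This minimal Python sketch parses the file; the function names are hypothetical. It separates the cumulative session counters from the TlsCurr* counters of currently active sessions. The cumulative counters are TlsTxSw and TlsRxSw for encryption in kernel software, and TlsTxDevice and TlsRxDevice for offload to the network card. Fed with the output above, it reports 91967 sessions in total and none currently active.

```python
from pathlib import Path
from typing import Dict


def parse_tls_stat(text: str) -> Dict[str, int]:
    """Parse 'Name value' lines as found in /proc/net/tls_stat."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) == 2 and fields[1].isdigit():
            stats[fields[0]] = int(fields[1])
    return stats


def ktls_summary(stats: Dict[str, int]) -> Dict[str, int]:
    """Cumulative vs. currently active kTLS sessions (kernel software and NIC offload)."""
    cumulative = ("TlsTxSw", "TlsRxSw", "TlsTxDevice", "TlsRxDevice")
    total = sum(stats.get(name, 0) for name in cumulative)
    current = sum(v for name, v in stats.items() if name.startswith("TlsCurr"))
    return {"total": total, "current": current}


if __name__ == "__main__":
    path = Path("/proc/net/tls_stat")
    if path.exists():
        print(ktls_summary(parse_tls_stat(path.read_text())))
```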

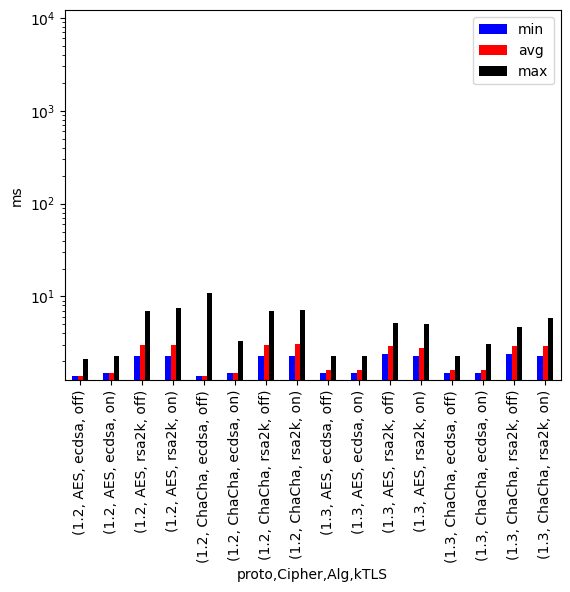

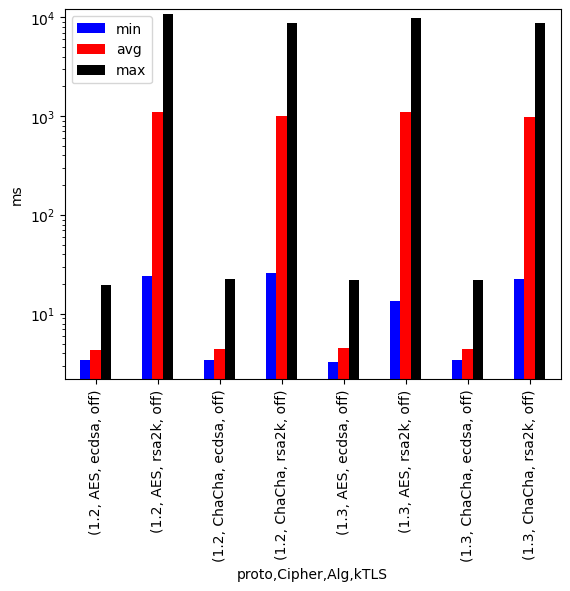

Since Linux kernel 4.13, it has been possible to offload TLS to the kernel level (known as kTLS). This should lead to better performance, because it saves context switches between the userspace TLS implementation and the network stack. We deployed kTLS on various machines but could not achieve a significant performance improvement. The biggest difference was observed on a VPS (1 vCore, 1 GB RAM) set up with nginx and a 10 MB file, with 10 parallel downloads:

| setup (ø = average) | time | throughput |
|---|---|---|
| kTLS enabled (ø) | 4.57 s | 112.52 MBit/s |
| kTLS disabled (ø) | 4.73 s | 108.07 MBit/s |

On this machine, kTLS improved the throughput by only about 4 %. We do not consider this significant, because the tests ran on a virtualized platform.
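The size of the effect can be checked with a short calculation. This Python snippet uses only the two averaged measurements from the table and takes the kTLS-disabled run as the baseline. It yields a throughput increase of about 4.1 % and a transfer time reduction of about 3.4 %.

```python
def relative_change(new: float, old: float) -> float:
    """Relative change of `new` compared with the baseline `old`, in percent."""
    return (new - old) / old * 100


throughput_gain = relative_change(112.52, 108.07)  # kTLS on vs. off, MBit/s
time_change = relative_change(4.57, 4.73)          # kTLS on vs. off, seconds
print(f"throughput: {throughput_gain:+.1f} %, time: {time_change:+.1f} %")  # +4.1 %, -3.4 %
```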

A more realistic test was run using httperf and a real access log from a production server. The first 10000 connections of the log were replayed using 500 parallel connections (i.e. clients) on two systems: an Intel Xeon Gold 6150[9] with 96 GB RAM under openSUSE 15.6, and a Raspberry Pi 4[10] with 8 GB RAM under CentOS Stream 9. Both systems used the nginx web server, since it was readily configurable with kTLS. The tests were performed three times, and the median was used for the evaluation. Note that the graphs are log-scaled. They clearly show that using ECC key material instead of RSA key material has a much larger impact on performance than using kTLS, especially on the Raspberry Pi 4.

AES

AES[11] is a family of block ciphers with a block size of 128 bits and three different key sizes of 128, 192 and 256 bits. It was introduced in 2001 as the replacement for DES[12], after successful attacks against DES had been demonstrated. As a block cipher, AES splits the data to be encrypted into blocks of 128 bits. At its core, it uses a substitution-permutation network with a fixed number of rounds that depends on the key size: 10, 12 and 14 rounds for 128-, 192- and 256-bit keys, respectively. A more in-depth description of AES is beyond the scope of this article; please refer to the NIST documentation or to open-source implementations such as the one in OpenSSL.

Due to the large number of logical and arithmetic operations, AES encryption can perform poorly on low-end CPUs. Hardware implementations, either as CPU instructions or offloaded to accompanying ASICs, are therefore used to speed up AES with good results; see the speed tests in the previous section.
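The relationship between key size and number of rounds can be expressed compactly. With the key length Nk counted in 32-bit words (4, 6 or 8), AES uses Nr = Nk + 6 rounds, and the key expansion produces 4 * (Nr + 1) round-key words. The following Python sketch computes these numbers and the number of 128-bit blocks a buffer occupies; the helper names are hypothetical. For the 2-byte buffers of the speed test, only a single block is processed per call. This suggests that fixed per-call overhead dominates at that size, which would be consistent with the low throughput in that column.

```python
def aes_rounds(key_bits: int) -> int:
    """Number of AES rounds for a given key size: Nr = Nk + 6."""
    if key_bits not in (128, 192, 256):
        raise ValueError("AES is defined for 128-, 192- and 256-bit keys only")
    nk = key_bits // 32  # key length in 32-bit words: 4, 6 or 8
    return nk + 6


def round_key_words(key_bits: int) -> int:
    """Words produced by the key expansion: Nb * (Nr + 1) with Nb = 4."""
    return 4 * (aes_rounds(key_bits) + 1)


def blocks_needed(n_bytes: int) -> int:
    """Number of 128-bit (16-byte) blocks needed to cover n_bytes."""
    return -(-n_bytes // 16)  # ceiling division


for bits in (128, 192, 256):
    print(bits, "bit key:", aes_rounds(bits), "rounds,", round_key_words(bits), "round-key words")
for size in (2, 31, 136, 1024, 8192, 16384):
    print(size, "bytes ->", blocks_needed(size), "blocks")
```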

Top-10 instructions of a run with 2-byte buffers for 1 s, given as share of the total instruction count:

| Instruction (Pentium 4 Prescott, 851 * 10^6 instr) | share | Instruction (Haswell, 344 * 10^6 instr) | share | Instruction (Skylake-X, 322 * 10^6 instr) | share |
|---|---|---|---|---|---|
| AND_GPRv_IMMz | 0.0291 | ADD_GPRv_IMMb | 0.0293 | ADD_GPRv_IMMb | 0.0298 |
| LEA_GPRv_AGEN | 0.03 | RET_NEAR | 0.0298 | RET_NEAR | 0.0303 |
| XOR_GPRv_MEMv | 0.0428 | LEA_GPRv_AGEN | 0.0328 | LEA_GPRv_AGEN | 0.0334 |
| MOV_GPRv_MEMv | 0.0491 | JNZ_RELBRb | 0.0373 | JNZ_RELBRb | 0.0379 |
| SHR_GPRv_IMMb | 0.0608 | JZ_RELBRb | 0.0451 | JZ_RELBRb | 0.0459 |
| SHL_GPRv_IMMb_C1r4 | 0.061 | POP_GPRv_58 | 0.0513 | POP_GPRv_58 | 0.0522 |
| MOVZX_GPRv_GPR8 | 0.0667 | PUSH_GPRv_50 | 0.0514 | PUSH_GPRv_50 | 0.0522 |
| MOVZX_GPRv_MEMb | 0.0731 | MOV_GPRv_MEMv | 0.0638 | MOV_GPRv_MEMv | 0.065 |
| MOV_GPRv_GPRv_89 | 0.0781 | TEST_GPRv_GPRv | 0.0712 | TEST_GPRv_GPRv | 0.0724 |
| XOR_GPRv_GPRv_31 | 0.143 | MOV_GPRv_GPRv_89 | 0.1026 | MOV_GPRv_GPRv_89 | 0.1043 |

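The AES-NI instructions AESENCLAST_XMMdq_XMMdq and AESENC_XMMdq_XMMdq do not appear in the table; their instruction shares are only 0.09 % and 1.14 %, respectively. Although they make up only a small fraction of all executed instructions, the decrease in the total instruction count of the speed test is clearly visible: from 851 * 10^6 instructions on the Pentium 4 Prescott, which lacks AES-NI, to 344 * 10^6 on Haswell and 322 * 10^6 on Skylake-X. This results in the previously mentioned performance gain when using CPU instructions for AES.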
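The reduction in executed instructions can be quantified directly from the totals in the table. A small Python sketch using only those numbers (the helper name is our own):

```python
# Total instruction counts of the AES speed test from the table (in millions).
totals = {"Pentium 4 Prescott": 851, "Haswell": 344, "Skylake-X": 322}
baseline = totals["Pentium 4 Prescott"]


def reduction_factor(count: int, reference: int = baseline) -> float:
    """How many times fewer instructions than the reference CPU were executed."""
    return reference / count


for cpu, count in totals.items():
    print(f"{cpu:<20} {count:>4} * 10^6 instr.  factor {reduction_factor(count):.2f}")
```

Haswell and Skylake-X execute roughly 2.5 times fewer instructions than the Pentium 4. Instruction count is only a proxy, though: it ignores differences in clock speed and instructions per cycle between the three CPUs.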

ChaCha20

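The ChaCha20 stream cipher[13] applies a fixed number of rounds (20) of addition, rotation, and XOR operations to 32-bit words arranged in a 4x4 matrix. Since the same operations are applied to several columns or diagonals of this matrix independently, vector instructions of the CPU can be leveraged to gain a performance boost; see the previous section for the measured values. Measurement runs with the Intel SDE[14] confirmed the use of vector CPU instructions: SSE instructions such as PADDD and PXOR appear on Haswell and AVX instructions such as VPADDD, VPXOR, and VPROLD on Skylake-X, whereas the Pentium 4 mainly executes scalar ADD, ROL, and XOR instructions.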

| Pentium 4 Prescott (591 * 10^6 instr. total) | share (fraction) | Haswell (467 * 10^6 instr. total) | share (fraction) | Skylake-X (409 * 10^6 instr. total) | share (fraction) |
|---|---|---|---|---|---|
| LEA_GPRv_AGEN | 0.03 | PSHUFD_XMMdq_XMMdq_IMMb | 0.0317 | LEA_GPRv_AGEN | 0.0395 |
| POP_GPRv_58 | 0.0307 | LEA_GPRv_AGEN | 0.0363 | MOV_MEMv_GPRv | 0.0398 |
| TEST_GPRv_GPRv | 0.0319 | MOV_MEMv_GPRv | 0.0366 | POP_GPRv_58 | 0.0455 |
| PUSH_GPRv_50 | 0.0365 | POP_GPRv_58 | 0.0417 | VPADDD_YMMqq_YMMqq_YMMqq | 0.046 |
| MOV_MEMv_GPRv | 0.0489 | PADDD_XMMdq_XMMdq | 0.0423 | VPROLD_YMMu32_MASKmskw_YMMu32_IMM8_AVX512 | 0.046 |
| MOV_GPRv_MEMv | 0.0629 | TEST_GPRv_GPRv | 0.0434 | VPXOR_YMMqq_YMMqq_YMMqq | 0.046 |
| MOV_GPRv_GPRv_89 | 0.0677 | PXOR_XMMdq_XMMdq | 0.0452 | TEST_GPRv_GPRv | 0.0473 |
| ROL_GPRv_IMMb | 0.124 | PUSH_GPRv_50 | 0.0466 | PUSH_GPRv_50 | 0.0507 |
| ADD_GPRv_GPRv_01 | 0.1295 | MOV_GPRv_MEMv | 0.0568 | MOV_GPRv_MEMv | 0.0619 |
| XOR_GPRv_GPRv_31 | 0.1345 | MOV_GPRv_GPRv_89 | 0.091 | MOV_GPRv_GPRv_89 | 0.0992 |
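The add-rotate-XOR structure of ChaCha20 can be illustrated with the quarter round as specified in RFC 8439. The Python sketch below is a scalar reference for illustration only (not constant-time, not optimized). Vectorized implementations compute the four independent column (or diagonal) quarter rounds in parallel, which is where instructions such as PADDD, PXOR, or VPROLD from the table come in. The function names are our own.

```python
MASK32 = 0xFFFFFFFF


def rotl32(x: int, n: int) -> int:
    """Rotate a 32-bit word left by n bits."""
    return ((x << n) | (x >> (32 - n))) & MASK32


def quarter_round(a: int, b: int, c: int, d: int) -> tuple:
    """ChaCha quarter round (RFC 8439, section 2.1): only ADD, XOR and rotate."""
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 16)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 12)
    a = (a + b) & MASK32
    d = rotl32(d ^ a, 8)
    c = (c + d) & MASK32
    b = rotl32(b ^ c, 7)
    return a, b, c, d


# Index quadruples of the 4x4 state (row-major, 16 words).
COLUMNS = ((0, 4, 8, 12), (1, 5, 9, 13), (2, 6, 10, 14), (3, 7, 11, 15))
DIAGONALS = ((0, 5, 10, 15), (1, 6, 11, 12), (2, 7, 8, 13), (3, 4, 9, 14))


def double_round(state: list) -> None:
    """One column round plus one diagonal round, applied in place.

    The four quarter rounds within each group touch disjoint words, so a SIMD
    implementation can compute them simultaneously. ChaCha20 runs 10 double rounds.
    """
    for group in (COLUMNS, DIAGONALS):
        for w, x, y, z in group:
            state[w], state[x], state[y], state[z] = quarter_round(
                state[w], state[x], state[y], state[z]
            )
```

The quarter round can be checked against the test vector in section 2.1.1 of RFC 8439: `quarter_round(0x11111111, 0x01020304, 0x9b8d6f43, 0x01234567)` yields `(0xea2a92f4, 0xcb1cf8ce, 0x4581472e, 0x5881c4bb)`.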

How-to: fast and secure TLS handshake

OCSP

Let's Encrypt is ending its support for the Online Certificate Status Protocol (OCSP). The stated reasons are privacy concerns and the extensive resources required to provide this service. In theory, the privacy concerns are addressed by OCSP Stapling. Nevertheless, neither OCSP nor OCSP Stapling will be available much longer to website operators using Let's Encrypt certificates.

Regardless of this, Chromium-based browsers do not perform OCSP or CRL checks by default anyway.

Ciphers

Nowadays, only TLSv1.2 and newer should be used. If backwards compatibility is not required, it is even better to offer only TLSv1.3. We recommend using only the cipher suites recommended by the BSI [15][16]. Additionally, we recommend offering ChaCha20 (which always uses a 256-bit key), because it performs better than AES on devices without hardware acceleration for AES. It is also preferable to use elliptic-curve keys, e.g. on the NIST P-256 curve, because this has a measurable impact on performance.
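The same policy can be expressed outside of a web server, e.g. with Python's standard `ssl` module. This is a minimal sketch, not a BSI-checked configuration: the cipher string is our own choice, the certificate paths are placeholders, and Python's `set_ciphers()` only affects TLS 1.2 and older, so the TLS 1.3 cipher suites keep OpenSSL's defaults.

```python
import ssl


def make_server_context(tls13_only: bool = False) -> ssl.SSLContext:
    """Server-side TLS context restricted to modern protocol versions and AEAD ciphers."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Refuse everything older than TLS 1.2, or older than TLS 1.3 if no legacy clients matter.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3 if tls13_only else ssl.TLSVersion.TLSv1_2
    # TLS 1.2 suites: ECDHE key exchange with AES-GCM or ChaCha20-Poly1305, no anonymous suites.
    # set_ciphers() does not touch the TLS 1.3 suites; those keep OpenSSL's defaults.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20:!aNULL")
    # Placeholder paths: load your (ideally P-256 ECDSA) certificate chain and key here.
    # ctx.load_cert_chain("/path/to/fullchain.pem", "/path/to/privkey.pem")
    return ctx


if __name__ == "__main__":
    for cipher in make_server_context().get_ciphers():
        print(cipher["protocol"], cipher["name"])
```

Passing `tls13_only=True` corresponds to the TLSv1.3-only setup recommended above when no older clients need to be supported.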

Performance can also be improved simply by configuring the server so that all its parts work well together.

Most servers first load the served data into RAM before delivering it. These memory operations cost time, so it is reasonable to enable the use of `sendfile()`. This allows servers to skip intermediate buffering of the data and therefore improves performance. Be aware that, among other issues, the `sendfile` mechanism does not work well when serving files from network shares.
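The difference between the two approaches can be sketched with Python's standard library: the first function copies each chunk through a user-space buffer, the second hands the file to `socket.sendfile()`, which uses `os.sendfile()` where the platform supports it and otherwise falls back to plain `send()`. A local socket pair stands in for a client connection; all function names are hypothetical.

```python
import os
import socket
import tempfile


def send_with_userspace_copy(sock: socket.socket, path: str, chunk_size: int = 64 * 1024) -> int:
    """Read the file chunk by chunk into a user-space buffer and write each chunk to the socket."""
    sent = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            sock.sendall(chunk)
            sent += len(chunk)
    return sent


def send_with_sendfile(sock: socket.socket, path: str) -> int:
    """Let the kernel move the file data to the socket (os.sendfile() where available)."""
    with open(path, "rb") as f:
        return sock.sendfile(f)


def recv_exactly(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes (or fewer if the peer closes the connection)."""
    buf = bytearray()
    while len(buf) < n:
        part = sock.recv(n - len(buf))
        if not part:
            break
        buf += part
    return bytes(buf)


def demo(payload: bytes) -> tuple:
    """Serve a small temporary 'static file' both ways over a local socket pair."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(payload)
        path = tmp.name
    try:
        server_side, client_side = socket.socketpair()
        with server_side, client_side:
            # The payload is kept small so it fits into the socket buffer before we read it.
            copied = recv_exactly(client_side, send_with_userspace_copy(server_side, path))
            zero_copy = recv_exactly(client_side, send_with_sendfile(server_side, path))
        return copied, zero_copy
    finally:
        os.remove(path)
```

Both variants deliver identical bytes; the point of `sendfile()` is that the second one avoids the round trip of the data through the server process.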

If possible, offload the TLS stack from user space (your server software). One option is to offload the TLS handling to the Linux kernel (kTLS). We do not recommend this, because it did not lead to significant speed improvements in our measurements. If a TLS-capable network card is available, it seems best to offload the TLS handling to this dedicated hardware.

In general, it is preferable to provide clients with the shortest complete certificate chain for your certificate, which saves time during transmission and verification.
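To see how much certificate data a server sends, one can measure the DER size of each certificate in the PEM chain file referenced by the server configuration. The Python sketch below is a hypothetical helper that only splits and decodes PEM blocks; it does not parse or validate the certificates themselves.

```python
from __future__ import annotations

import re
import ssl

# Matches one complete PEM certificate block, including header and footer lines.
PEM_BLOCK = re.compile(
    r"-----BEGIN CERTIFICATE-----.+?-----END CERTIFICATE-----", re.DOTALL
)


def chain_der_sizes(pem_chain: str) -> list[int]:
    """Return the DER size in bytes of every certificate in a PEM chain, in file order."""
    return [len(ssl.PEM_cert_to_DER_cert(block)) for block in PEM_BLOCK.findall(pem_chain)]
```

For example, calling `chain_der_sizes()` on the contents of a chain file (e.g. a placeholder `fullchain.pem`) lists one size per certificate. A root certificate at the end of that list is usually unnecessary, since clients already have it in their trust store.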

Exemplary configuration

A fast and secure configuration for nginx, assuming small setups, could look like this:

```nginx
# For more information on configuration, see:
#   * Official English Documentation: http://nginx.org/en/docs/
#   * Official Russian Documentation: http://nginx.org/ru/docs/

user nginx;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

# Load dynamic modules. See /usr/share/doc/nginx/README.dynamic.
include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

http {
    access_log /var/log/nginx/access.log;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    # Load modular configuration files from the /etc/nginx/conf.d directory.
    # See http://nginx.org/en/docs/ngx_core_module.html#include
    # for more information.
    include /etc/nginx/conf.d/*.conf;

    server {
        listen 80;
        listen [::]:80;
        listen 443 ssl;
        listen [::]:443 ssl;
        server_name _;
        root /usr/share/nginx/html;

        # Load configuration files for the default server block.
        include /etc/nginx/default.d/*.conf;

        # Prevent nginx HTTP server detection
        server_tokens off;

        ssl_certificate "[path to certificate]";
        ssl_certificate_key "[path to key]";
        ssl_session_cache shared:SSL:1m;
        ssl_session_timeout 10m;

        # only allow TLSv1.3
        ssl_protocols TLSv1.3;

        # TLSv1.3 cipher suites: AES-256-GCM, plus ChaCha20-Poly1305 for
        # devices without hardware AES acceleration (e.g. mobile devices)
        ssl_conf_command Ciphersuites TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256;

        # reduce memory copies by using sendfile
        sendfile on;
        sendfile_max_chunk 1m;  # limits chunks to 1 megabyte

        # tcp_nopush: only send full packets (together with sendfile);
        # tcp_nodelay: send the last, partial packet immediately (no Nagle delay)
        tcp_nopush on;
        tcp_nodelay on;

        keepalive_timeout 65;
        types_hash_max_size 4096;

        error_page 404 /404.html;
        location = /404.html {
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
        }
    }
}
```

For those who are interested

Useful links

There are some helpful web services when working with TLS.

Enable kTLS

First, check whether the corresponding `tls` kernel module is loaded on your instance:

```shell
$ lsmod | grep tls
```
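The same check can be scripted, e.g. for monitoring. The Python sketch below reads `/proc/modules` (the file whose contents `lsmod` formats) and, unlike `grep tls`, matches only a module named exactly `tls`. If TLS support is compiled into the kernel rather than built as a module, it does not appear there at all. The function name is hypothetical.

```python
from pathlib import Path


def tls_module_loaded(proc_modules_text: str) -> bool:
    """True if a module named exactly 'tls' appears in /proc/modules-style text."""
    return any(
        line.split()[0] == "tls"  # first field of each line is the module name
        for line in proc_modules_text.splitlines()
        if line.strip()
    )


if __name__ == "__main__":
    modules = Path("/proc/modules")
    if modules.exists():
        print("tls module loaded:", tls_module_loaded(modules.read_text()))
```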

Your server software must also have been built with kTLS support. In most cases, the build parameters can be displayed with a command-line option such as `-v` or `-V` (e.g. `nginx -V`). Once these preconditions are fulfilled, kTLS can be activated as follows.

using nginx:

- Add `ssl_conf_command Options KTLS;` to the server section.
- Afterwards, you can verify it by enabling debug output; you should then see lines containing `BIO_get_ktls_send` and `SSL_sendfile`.

using apache2:

- Apache has no kTLS implementation, possibly due to its low impact. Therefore, we can only activate the `sendfile()`[17] mechanism by adding `EnableSendfile On` to your configuration (see the documentation).

using lighttpd:

- Add `ssl.openssl.ssl-conf-cmd += ("Options" => "KTLS")`[18] to the configuration. Note that a leading minus, as in `"-KTLS"`, disables kTLS instead.

In general, you can verify the changes with a benchmarking tool such as `ab` or `httperf`, or by inspecting `/proc/net/tls_stat`.
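The counters in `/proc/net/tls_stat` can also be read programmatically. The Python sketch below assumes the usual layout of one counter name and one integer per line, as documented for the Linux kernel TLS implementation; a non-zero `TlsTxSw` or `TlsTxDevice` counter indicates that kTLS transmit sessions have been set up in software or on the network card since boot. The function names and the sample values used for testing are made up.

```python
from pathlib import Path

TLS_STAT = Path("/proc/net/tls_stat")


def parse_tls_stat(text: str) -> dict:
    """Parse 'Name<whitespace>value' lines into a dict of integer counters."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def ktls_tx_used(stats: dict) -> bool:
    """True if any kTLS transmit session (software or NIC offload) was opened since boot."""
    return stats.get("TlsTxSw", 0) + stats.get("TlsTxDevice", 0) > 0


if __name__ == "__main__":
    if TLS_STAT.exists():
        counters = parse_tls_stat(TLS_STAT.read_text())
        print("kTLS TX used since boot:", ktls_tx_used(counters))
        print("open kTLS TX sessions:", counters.get("TlsCurrTxSw", 0) + counters.get("TlsCurrTxDevice", 0))
    else:
        print("/proc/net/tls_stat not found: tls module not loaded or not a Linux system")
```

Checking the counters before and after a benchmark run shows whether the web server actually used kTLS for the test connections.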